Taking a look at python-afl

I have been using american fuzzy lop to fuzz various C and C++ programs and libraries. It is a wonderfully fast fuzzer that is generally easy to get started with and it usually finds new bugs in programs that have not been fuzzed previously. Support for american fuzzy lop instrumentation has also been added for other languages and I decided to try out how it works with Python. More specifically with the reference CPython implementation of it.

Fuzzing Python programs with american fuzzy lop

American fuzzy lop generally works by running a program that is compiled with american fuzzy lop instrumentation built in. It executes the program with afl-fuzz command that modifies the input data that is fed to the program, monitors how the program behaves, and registers everything that causes abnormal program behavior. This works well for natively compiled programs, but causes various issues with interpreted programs.

Python is by default an interpreted language, so to execute Python programs, you need to start a Python interpreter before executing your code. This means that if you would instrument the Python interpreter with american fuzzy lop instrumentation and run the interpreter with afl-fuzz, it would mostly fuzz the inner workings of the interpreter, not the actual Python program.

Fortunately there is python-afl module that enables american fuzzy lop instrumentation for just the Python code instead of instrumenting the Python interpreter. In native programs american fuzzy lop compiler wrapper (afl-gcc, afl-clang, afl-clang-fast) adds the necessary instrumentation and the connection to afl-fuzz. Python-afl is, however, designed in such way that it doesn’t try to wrap the whole program, but requires you to create a wrapper module that initializes fuzzing.

The most simple way to wrap a Python program with python-afl is to initialize python-afl and then run the program (assuming that main() function exists):

import afl

afl.init()

import fuzzable_module

fuzzable_module.main()

This script, saved to fuzz-wrapper.py, can be then run with py-afl-fuzz command that wraps afl-fuzz for Python programs:

py-afl-fuzz -m 400 -i initial-inputs/ -o fuzzing-results/ -- \

python fuzz-wrapper.py @@

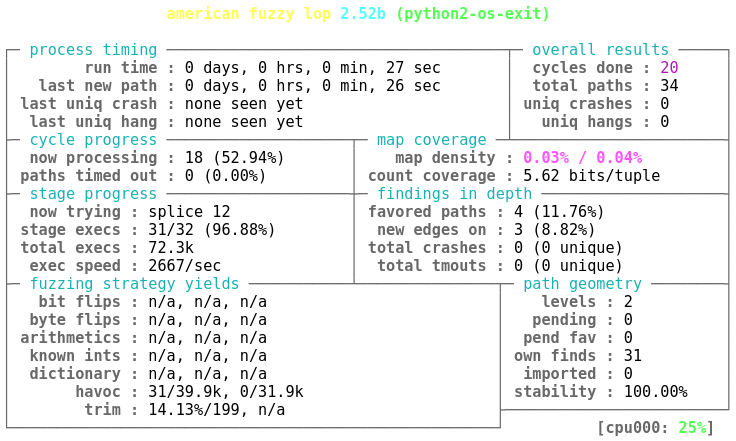

More details about these command line switches can be found from AFL readme file. This then brings out the famous american fuzzy lop status screen, but now for Python programs:

Next sections will explain in more details how to make fuzzing these programs more efficient and what pitfalls there could be in Python programs from fuzzing efficiency point of view.

Afl-fuzz modes and their python-afl equivalents

Generally afl-fuzz provides 4 fuzzing modes that differ in how the program execution between different fuzzing inputs behaves:

- Dumb mode that just executes the program by doing

fork()andexecv(). This is the slowest mode that does not rely on any fancy tricks to speed up program execution and also does not provide any insights how the program behaves with different inputs. - Basic fork server mode where the fuzzed binary does all the initialization steps that happen before calling the

main()function and then program is repeatedly forked from that point on. This also includes instrumentation that is compiled in to the program so there already is some insight on what is happening inside the program when a specific input is processed. There exists QEMU mode for afl-fuzz that technically enables fork server mode for uninstrumented binaries, but with some performance penalty. - Deferred instrumentation that works in similar fashion as the basic fork server mode. Instead forking just before calling

main()function, this enables to move the fork point further down the line and enables heavy program initialization steps to be avoided if they can be executed independently of the input. - Persistent mode where the fuzzable part of the program is repeatedly executed without resetting the program memory every time the program is called. This only works in practice if the program does not have a modifiable global state that can not be reset to the previous state.

Afl-fuzz generates new inputs and analyzes the program execution results roughly at the same speed regardless of the mode. So these modes are in the order of efficiency in a sense that how much overhead there is for fuzzing one input. They are also in the order of complexity on how easy they are to integrate into an existing program that has not been made to be fuzzed. Especially as the fastest modes require clang to be available as a compiler and the fuzzable program needs to be able to be compiled and linked with it.

Python-afl, fortunately, provides equivalent modes without having to use special tools. These are also very fast to try out, as you don’t need to compile Python programs from scratch.

The dumb mode would be just equivalent of running Python interpreter directly with afl-fuzz without any instrumentation, so we will skip over it. The more interesting part is to use the deferred instrumentation. The code in the introductory section called afl.init() before the fuzzable module was imported. This is the most safe approach, as the fuzz target might do something with the input at import time. But more realistically, Python programs generally only call import statements, possibly conditionally, during the start-up and don’t handle any user provided data yet. So in this case, we can do imports first and move the afl.init() function just before where the actual work happens:

import afl, fuzzable_module

afl.init()

fuzzable_module.main()

We can gain some speed-ups with this by calling os._exit() function instead of letting Python to exit in the usual fashion where all the destructors and other functions that are called at exit:

import afl, fuzzable_module, os

afl.init()

fuzzable_module.main()

os._exit(0)

Previous examples assume that the input file generated by the fuzzer comes as the first parameter on the command line. This is quite a good assumption, as many data processing modules for Python include a command line interface where they read and process files given on the command line. But if we can directly call the data processing function, we can instead use the standard input to feed the data:

import afl, fuzzable_module, os, sys

afl.init()

fuzzable_module.process_data(sys.stdin)

os._exit(0)

With Python 3 comes additional complexity. Python 3 processes the standard input using the encoding specified in the environment. Often in Unix environments it is UTF-8. As afl-fuzz mostly does bit manipulation, input is going to end up with broken UTF-8 data and results in exception when reading from the standard input file object. To work around this, you can use sys.stdin.buffer instead of sys.stdin in Python 3 based programs. Or create a shim that always results in raw bytes:

import afl, fuzzable_module, os, sys

try:

# Python 3:

stdin_compat = sys.stdin.buffer

except AttributeError:

# There is no buffer attribute in Python 2:

stdin_compat = sys.stdin

afl.init()

fuzzable_module.process_data(stdin_compat)

os._exit(0)

The fastest persistent mode requires that the program should not have a global state where the previous program execution affects the next one. There unfortunately is a surprising amount of global state in Python programs. It is not that uncommon to initialize some specific variables only during the program execution and then re-use the results later. This usually is harmless, but negatively affects the program stability that afl-fuzz is going to show in its status screen.

Persistent mode code for a fuzzable program could look like following including the learnings from the deferred instrumentation and its speedups:

import afl, fuzzable_module, os

try:

# Python 3:

stdin_compat = sys.stdin.buffer

except AttributeError:

# There is no buffer attribute in Python 2:

stdin_compat = sys.stdin

while afl.loop(10000):

fuzzable_module.process_data(stdin_compat)

os._exit(0)

Persistent mode stability

This section has been added 6 months after writing the original article when Jakub Wilk pointed out potential stability issues with the original persistent mode code example on OS X and FreeBSD.

Some systems where Python runs implement file manipulation by using buffered functions. The most prominent operating system where afl-fuzz runs that uses buffered I/O in Python is OS X/macOS and using the persistent mode example in the previous section does not work in Python 2 on these systems. It shows up as a low stability percentage in afl-fuzz user interface that means that the same input from afl-fuzz perspective leads program taking different paths between subsequent executions, as the input read by the program is not the same as what afl-fuzz has provided.

Buffered reads from a file work by calling low level file reading system calls with a moderately sized input buffer. This input buffer then makes sure that if we do a lot of small file system reads, like reading frame based data formats frame by frame, they will not result in as many system calls as they would result without buffering. This generally increases program performance, as programmers can rely on file system functions to work relatively fast even with non-optimal access patterns.

You need to set the stream position to the beginning when using persistent mode with afl-fuzz on systems that use buffered I/O and you are reading from the standard input. All these examples read data from the standard input, as it is generally more efficient than opening a new file for each fuzzing iteration. Rewinding the stream position to the beginning by using file.seek(0) method.

Unfortunately not all streams support seeking and with afl-fuzz the situation is more tricky. If you execute the program normally and read from sys.stdin, by default the standard input does not support seeking. But when you run it through afl-fuzz, the standard input is in reality a file descriptor that is backed up by a file on a file system. And this file supports seeking. So you need some extra code to detect if the standard input supports seeking or not:

import afl, fuzzable_module, os

try:

# Python 3:

stdin_compat = sys.stdin.buffer

except AttributeError:

# There is no buffer attribute in Python 2:

stdin_compat = sys.stdin

try:

# Figure out if the standard input supports seeking or not:

stdin_compat.seek(0)

def rewind(stream): stream.seek(0)

# In Python 2 and 3 seek failure exception differs:

except (IOError, OSError):

# Do nothing if we can not seek the stream:

def rewind(stream): pass

while afl.loop(10000):

fuzzable_module.process_data(stdin_compat)

rewind(stdin_compat)

os._exit(0)

The effect of adding standard input rewinding more than doubles the fuzzing overhead for the persistent mode on the Debian GNU/Linux system that I have used to do these benchmarks. On OS X the effect is less than 10 % overhead increase for Python 3 and is required for Python 2 to even work with afl-fuzz on OS X.

Benchmarking different afl-fuzz modes

I wanted to measure how these different afl-fuzz modes behave with Python. So I created a small fuzz target whose main algorithm does some conditional computation based on the input data and prints out the result:

def fuzz_one(stdin, values):

data = stdin.read(128)

total = 0

for key in data:

# This only includes lowercase ASCII letters:

if key not in values:

continue

value = values[key]

if value % 5 == 0:

total += value * 5

total += ord(key)

elif value % 3 == 0:

total += value * 3

total += ord(key)

elif value % 2 == 0:

total += value * 2

total += ord(key)

else:

total += value + ord(key)

print(total)

This is just to exercise the fuzzer a little bit more than a trivial function that does nothing would do. I also created an equivalent fuzz target in C++ to give numbers to compare what kind of an overhead different fuzzing modes incur for both Python and native applications. The approximate results are summarized in table 1. The actual scripts used to generate this data are available from following links: measure-times.sh, target-simple.template.py, and target-simple.cpp.

| Python 2 | Python 3 | Native | |

|---|---|---|---|

| dumb mode | 110/s | 47/s | 1200/s |

| pre-init | 130/s | 46/s | 5800/s |

| deferred | 560/s | 260/s | 6800/s |

| quick exit | 2700/s | 2100/s | 8700/s |

| rewinding persistent mode | 5800/s | 5900/s | - |

| persistent mode | 17000/s | 15000/s | 44000/s |

What these results show, it is possible to make fuzzable program in Python in such way that the fuzzing start-up overhead is only three to four times larger than for a native one with afl-fuzz. This is an excellent result considering that generally algorithms implemented in Python can be considered 10-100 times slower than ones implemented in C family languages. But if you want to use Python for performance critical tasks, you are anyways using Cython or write performance critical parts in C.

There is a clear performance difference between Python 2.7.14 and Python 3.6.4 especially when all start-up and exit optimization tricks are not in use. This difference is also visible in Python start-up benchmarks at speed.python.org. This difference gets smaller when the persistent mode is used as Python executable is not shut down immediately after processing one input. What can also help Python 3 in the persistent fuzzing mode is the fact that the tracing function that python-afl sets with sys.settrace() is called only half as often with Python 3 is it is called with Python 2 for this fuzz target.

More speed for repeated Python executions

Python enables imports and other type of code loading at any phase of the program execution. This makes it quite possible that program has not fully loaded before it is executed for the first time. This is usually used as a configuration mechanism to configure the program based on the runtime environment and other type of configuration. It also provides the possibility to write plugins, similarly to what dynamically loaded libraries provide.

You can see from table 1 how the fuzzing overhead decreases a lot when the fuzzing start point moved after import statements for this simple example program. So when I was fuzzing an old version of flake8, I tried to see if it had any hidden configuration statements that would execute and cache their results for repeated calls. And it did!

Initially I used following type of fuzzing wrapper for flake8:

import afl, sys, flake8.run

afl.init()

flake8.run.check_code(sys.stdin.read())

It is basically a simple wrapper that imports all what it needs and then fuzzes what is fed from the standard input to the program. But the performance of this was horrible, around 15 executions/second. So I tried to see what happens when I change the code a little bit by calling the flake8.run.check_code() function with an empty string before setting the fuzzing starting point:

import afl, sys, flake8.run

# Make sure that the hidden runtime configuration is executed:

flake8.run.check_code("")

afl.init()

flake8.run.check_code(sys.stdin.read())

This doubled the execution speed to around 30 executions/second. It is still quite slow for the small inputs that afl-fuzz initially creates, but an improvement nonetheless. I looked at what flake8 does when it is executed and a following line popped up:

from pkg_resources import iter_entry_points

There basically is a hidden import statement in the execution path whose result is cached after the first time it is encountered. Also this pkg_resources.iter_entry_points() function is used to configure the program at runtime and that also adds some extra overhead to the process.

Flake8 also by default tries to execute checks in parallel with multiprocessing module. This might be a good idea when you have multiple files to verify at once, but during fuzzing it just adds unneeded overhead. Also the fact that it starts a new process makes the fuzzer lose all information about what is happening in the subprocess. Fortunately in flake8 it was possible to override the detected multiprocessing support by just setting one variable into False and then flake8 will act as there is no multiprocessing support. This increased the average fuzzing speed of flake8 by threefold.

The final speedup with flake8 came when looking at how flake8 is constructed. It is basically a wrapper around mccabe, pycodestyle, and pyflakes packages. So rather than fuzz flake8, it is much more productive to create a fuzz target for each one of those packages individually. I did this for pycodestyle and ended up finally executing it with around 420 executions/second for trivial data and around 200 executions/second for more realistic. So basic recommendations on how to fuzz native programs also apply for Python.

Monkey patching around fuzzing barriers

As american fuzzy lop does not know almost anything about the input, it can encounter various impediments (see section 13 from afl’s README.txt) when the file format includes any values that depend on the previously encountered data. This is especially problematic with checksums and is also an issue with other mutation based fuzzers. To work around this the C world, you can generally use C preprocessor to turn off checksum verification when such thing is encountered. This also applies for all other types of barriers that might skew fuzzing results, like random number usage.

Unfortunately Python does not have preprocessor support by default, so this type of conditional compiling is out of the question. Fortunately Python provides the possibility to do monkey patching where you can replace functions or methods at runtime. So to make a library more fuzzer friendly, you can monkey patch all functions related to data verification to always return True, or return some constant value when checksums are considered.

I used this approach to fuzz python-evxt 0.6.1 library. Python-evxt is a library to parse Windows event log files and is one of the first hits when you search Python Package Index with “pure python parser” keyword. The file format includes CRC32 checksum that will prevent the fuzzer from being able to meaningfully modify the input file, as almost all modifications will create an incorrect checksum in the file.

To monkey patch around this issue, I searched the source code for all functions that potentially have anything to do with checksum generation and made them always to return a constant value:

import Evtx.Evtx

checksum_patches = (

(Evtx.Evtx.ChunkHeader, "calculate_header_checksum"),

(Evtx.Evtx.ChunkHeader, "header_checksum"),

(Evtx.Evtx.ChunkHeader, "calculate_data_checksum"),

(Evtx.Evtx.ChunkHeader, "data_checksum"),

(Evtx.Evtx.FileHeader, "checksum"),

(Evtx.Evtx.FileHeader, "calculate_checksum"),

)

for class_obj, method_name in checksum_patches:

setattr(class_obj, method_name, lambda *args, **kw: 0)

The checksum_patches variable holds all the functions that you need to overwrite to ignore checksums. You can use setattr() to overwrite these methods on class level with an anonymous function lambda *args, **kw: 0 that always returns 0 and takes any arguments. Taking any number of arguments in any fashion is enabled with *args and **kw and this syntax is explained in keyword arguments section on Python’s control flow tools manual.

Takeaways

I was impressed on how low fuzzing overhead Python programs can have when compared to C family languages. When looking at how python-afl is implemented, it becomes quite clear that the deferred mode of afl-fuzz has a great part in this where the whole Python environment and program initialization is skipped between different fuzzing runs.

Making a Python module fuzzable was also more easy than in C family languages. Although american fuzzy lop is already the easiest fuzzing engine to get started with, the fact that it uses clang to do the more advanced tricks often gives headaches when trying to get software to compile. The fact that I did not have to use any modified Python interpreter to get started but only had to import afl module made me to realize how many steps I skipped that I normally have to do when using american fuzzy lop on new systems.

Thanks to Python’s dynamic execution and monkey patching capabilities I could also try out fuzz target creation with external libraries without having to actually modify the original code. Especially selecting some specific functions to fuzz and overriding checksum generation would generally require nasty software patches with C family languages. Especially if there is main() function to override.

It was also nice to realize that the os._exit(0) equivalent in standard C library function call _Exit(0) could help make light native fuzz targets even faster. The process cleanup in this relatively trivial C++ program adds almost 30% overhead for repeated program execution. Although it will likely break any sanitizer that does any verification work during the exit, like searching for dynamically allocated memory that was not freed.