
afl-fuzz on different file systems

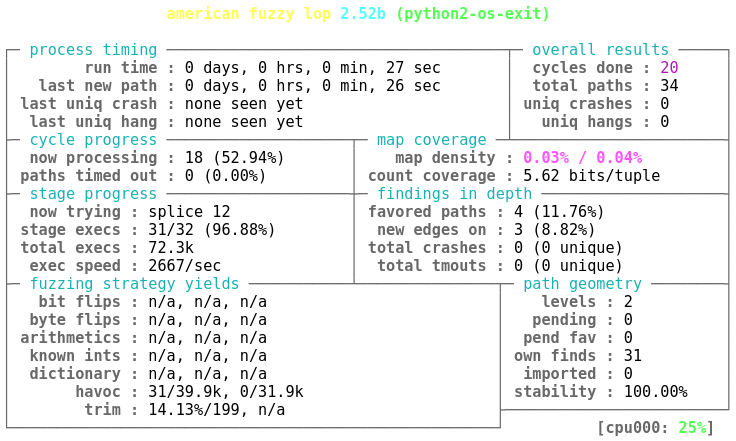

One day I was fuzzing around with american fuzzy lop and accidentally pointed the output directory for fuzzer findings to a file system on a physical disk instead of the usual shared memory file system. I noticed my mistake and changed the output directory back to the usual place. The result was a clear difference in how many fuzzing iterations/second the afl-fuzz program could do. This then led to this comparison of different file systems and fuzzing modes on them with the workload that afl-fuzz has.

Hardware and software background

Fuzzing is a computationally intensive way to find bugs in programs, and it usually keeps the CPU of the machine fully busy. This, together with writing data to the disk, can have adverse effects on the hardware even though we are only working with software. Some of the risks are listed in the common-sense risks section of american fuzzy lop’s README.txt file, which is a good read on the subject. In this article I’m mainly interested in what happens when you point the output of afl-fuzz to a device with a specific file system.

afl-fuzz as part of american fuzzy lop

American fuzzy lop is a successful general purpose fuzzer that finds bugs for you while you sleep. afl-fuzz is the executable program that does the hard work of generating new data, repeatedly running the target program (fuzz target), and analyzing the results that come up in these fuzz target executions. It has handcrafted heuristics and fuzzing strategies that in practice provide quite successful results without the need for tuning.

Figure 1 roughly visualizes the different parts of a fuzzing session when using afl-fuzz. It spawns the program that we try to fuzz. This program then creates a fork server that is responsible for communicating with the main afl-fuzz executable over pipes and for spawning new fuzz target instances with the fork() call. The fork server is actually a small shim that is part of the fuzz target. Also, the new program instance that the fork() call spawns still technically holds a reference to the fork server, but those parts are just active at different times, visualized as gray in the figure. If the fuzz target is in persistent mode, it doesn’t get restarted for every new input.

Data is passed to the fuzz target either over standard input or over a newly created file that the fuzz target reads. Then the fuzz target executes, writes an execution trace to shared memory, and finishes running. afl-fuzz then reads the trace left by the fuzz target from the shared memory and creates a new input by mutating old ones. What to mutate is controlled by the new and historical information that the instrumentation data from the fuzz target provides.

Solid state drives

Solid state drives are nowadays the most common physical storage medium in generic and special purpose computers. They are fast and relatively decently priced for the storage needs of a regular software developer. The decently priced solid state drives come with a price of somewhat limited write endurance due to the properties of the physical world and manufacturing compromises.

Currently (early 2018) the promised write endurance of consumer level solid state drives (using TLC NAND) is, in terabytes, around half of the drive capacity in gigabytes. This means that a 200-250 gigabyte SSD has a write endurance of around 100 terabytes. In practice the write endurance is likely higher, at least based on a test from 2013-2015.

Such write endurance does not really pose any limitations in the usual consumer level and professional workloads. Fuzzing, however, is not the most usual workload. The whole idea of a fuzzer is to generate new data all the time, make the program execute it, and then either discard or save it depending on whether the program behavior is interesting or not. So we can expect that a fuzzer will generate quite a lot of data while it’s running.

A quick back-of-the-envelope calculation, assuming 10 parallel fuzzers running 1000 executions/second with 1000 bytes of input per execution on average, results in over 20 terabytes of data generated every month. If all this is directly written to a drive, we can expect to have around 5 months until we reach the promised write endurance limits of a typical solid state drive at the time of this writing. Caching works to prolong this, but then there are all the invisible file system data structures, minimum file system block sizes, and write amplification that fight against these savings.
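The arithmetic behind this estimate can be sketched in a few lines of C. This is only an illustration: the function names are made up for this example, a month is assumed to be 30 days, and terabytes are counted as 10^12 bytes.

```c
#include <stdint.h>

/* Bytes of fuzz input generated during one month.
 * Assumption: a month is 30 days long. */
uint64_t monthly_input_bytes(uint64_t fuzzers, uint64_t execs_per_second,
                             uint64_t bytes_per_exec)
{
    const uint64_t seconds_per_month = 30ULL * 24 * 60 * 60;
    return fuzzers * execs_per_second * bytes_per_exec * seconds_per_month;
}

/* Months until the rated write endurance is used up, assuming that
 * every generated byte ends up on the drive exactly once. */
double months_until_worn(uint64_t endurance_bytes, uint64_t bytes_per_month)
{
    return (double)endurance_bytes / (double)bytes_per_month;
}
```

With 10 fuzzers, 1000 executions/second, and 1000 bytes per execution this gives about 25.9 terabytes per month, and a drive rated for 100 terabytes would last a little under 4 months at that rate. Rounding the monthly figure down to 20 terabytes gives the 5 months mentioned above.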

Two data passing modes of afl-fuzz

The afl-fuzz program has two ways to pass the new input to the fuzz target: passing the data over the standard input, and creating a new file with the new data and having the fuzz target read the data from the just created file.

The most compact afl-fuzz command only includes the information for initial inputs, the output directory, and the command to execute the fuzz target without any special arguments:

    $ afl-fuzz -i in/ -o out/ -- fuzz-target

The fuzz target gets the input data from its standard input file descriptor, so it does not need to explicitly open any files. Technically the standard input of the child process is backed by a file that is always modified by the afl-fuzz process when new input is generated. This file is also the .cur_input file in the fuzzer instance directory. The exact details of how this works are explained later.

The commands that include more explicit input file path information look like this:

    # "@@" indicates the name of the file that the program gets with new
    # fuzz data. "-f" can be used to hard code the input file location
    # that then can be passed to the fuzz target or not.
    $ afl-fuzz -i in/ -o out/ -- fuzz-target @@
    $ afl-fuzz -i in/ -o out/ -f target.file -- fuzz-target @@
    $ afl-fuzz -i in/ -o out/ -f target.file -- fuzz-target

In the first command there is no explicit path to the input file specified, so the target program will get the path as the first argument, indicated by @@. The actual file is located in the fuzzer instance output directory (here it’s out/) as the .cur_input file. The latter two forms with the -f target.file switch make it possible to define the location of the input file so that it can reside outside the output directory.

Details of input data writing

Now that we know that afl-fuzz provides two different ways for the data to end up in the program, let’s look at the extracted details of the write_to_testcase() function to see how this data is passed to the child process.

When afl-fuzz writes data into the standard input stream of the fuzz target, the main parts of the code look like this on both the afl-fuzz and the fuzz target side:

    // afl-fuzz part:
    {
        // Make sure that reads done by fuzz target do not affect
        // new testcase writes:
        lseek(testcase_fd, 0, SEEK_SET);
        // Write new testcase data to the file:
        write(testcase_fd, testcase_data, size);
        // Make sure that old data in testcase file does not leak
        // into the new fuzzing iteration:
        ftruncate(testcase_fd, size);
        // Make read() act as it would read a fresh standard input
        // instance:
        lseek(testcase_fd, 0, SEEK_SET);
    }
    // Fuzz target part:
    {
        // stdin file descriptor inside fuzz target is actually
        // identical to testcase_fd thanks to dup2(). So we just
        // read whatever happens to be there:
        ssize_t read_size = read(testcase_fd, buffer, sizeof(buffer));
        // Do some actual fuzzing:
        fuzz_one(buffer, read_size);
    }

What happens here is that first the position in the file is changed to the beginning. New data is written over the old data. The file length is changed to correspond to the new data length. The position is set to the beginning of the file again. Then the fuzz target reads from this file descriptor and runs the just written data through the actual program logic.

When passing data to the program through a named file, the following types of operations happen:

    // afl-fuzz part:
    {
        // Remove the old output file.
        unlink(out_file);
        // Create a new file with the same name.
        int out_fd = open(out_file, O_WRONLY | O_CREAT | O_EXCL, 0600);
        if (out_fd < 0) {
            abort();
        }
        // Write the test data into the created file.
        write(out_fd, data, size);
        // Close the created file so that the data is available to other
        // processes.
        close(out_fd);
    }
    // Fuzz target part:
    {
        // Open the file created by afl-fuzz.
        int in_fd = open(out_file, O_RDONLY);
        if (in_fd < 0) {
            abort();
        }
        // Read enough data from the opened file descriptor for fuzzing.
        ssize_t read_size = read(in_fd, buffer, sizeof(buffer));
        // Close the opened file descriptor so that we don't leak
        // resources.
        close(in_fd);
        // Do some actual fuzzing:
        fuzz_one(buffer, read_size);
    }

Basically the old input file is removed and a new file is created, data is written to it, and it’s closed. Then the fuzz target opens the just created file, reads the data out of it, closes the opened file descriptor, and runs the data through the actual program logic.

You can already see that there are more file system related functions used when the fuzz data file is recreated. These functions are also heavier than the ones used in the standard input version. When you call unlink() and open(), the Linux kernel needs to do a pathname lookup to figure out what exact file objects are accessed. When you only have the file descriptor to manipulate, as in the standard input case, you avoid these pathname lookups and hopefully manipulate purely numeric data. Also, when you open a new file, the kernel has to actually create the corresponding inodes and their data structures on the file system. This has a certain amount of overhead.

So looking at the called functions, it would feel like there is going to be a fuzzing overhead difference between these two input data creation approaches.
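One rough way to check this assumption is to time both write patterns without a fuzz target in between. The following is a minimal sketch, not code taken from afl-fuzz: the function names are hypothetical, error handling is reduced to return values, and only the writer side of each pattern is measured.

```c
#define _POSIX_C_SOURCE 200809L
#include <fcntl.h>
#include <stddef.h>
#include <sys/types.h>
#include <time.h>
#include <unistd.h>

/* Standard input style: overwrite an already open file in place. */
int write_in_place(int fd, const void *data, size_t size)
{
    if (lseek(fd, 0, SEEK_SET) < 0) return -1;
    if (write(fd, data, size) != (ssize_t)size) return -1;
    if (ftruncate(fd, (off_t)size) < 0) return -1;
    if (lseek(fd, 0, SEEK_SET) < 0) return -1;
    return 0;
}

/* Named file style: remove the old file and create a new one. */
int write_by_recreating(const char *path, const void *data, size_t size)
{
    unlink(path); /* The file may not exist yet on the first round. */
    int fd = open(path, O_WRONLY | O_CREAT | O_EXCL, 0600);
    if (fd < 0) return -1;
    ssize_t written = write(fd, data, size);
    if (close(fd) < 0) return -1;
    return written == (ssize_t)size ? 0 : -1;
}

static double now_seconds(void)
{
    struct timespec ts;
    clock_gettime(CLOCK_MONOTONIC, &ts);
    return (double)ts.tv_sec + (double)ts.tv_nsec / 1e9;
}

/* Iterations/second for in-place rewrites, or -1.0 on a failed write. */
double rate_in_place(int fd, const void *data, size_t size, long rounds)
{
    double start = now_seconds();
    for (long i = 0; i < rounds; i++) {
        if (write_in_place(fd, data, size) != 0) return -1.0;
    }
    double elapsed = now_seconds() - start;
    return elapsed > 0.0 ? (double)rounds / elapsed : 0.0;
}

/* Iterations/second for unlink and recreate, or -1.0 on a failed write. */
double rate_by_recreating(const char *path, const void *data, size_t size,
                          long rounds)
{
    double start = now_seconds();
    for (long i = 0; i < rounds; i++) {
        if (write_by_recreating(path, data, size) != 0) return -1.0;
    }
    double elapsed = now_seconds() - start;
    return elapsed > 0.0 ? (double)rounds / elapsed : 0.0;
}
```

Calling rate_in_place() and rate_by_recreating() with the same buffer and round count on a file that resides on the file system of interest gives two numbers that can be compared directly. The absolute values will naturally depend on the machine and the kernel.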

Benchmarking

Theoretical speculation is always nice, but the real hard data comes from benchmarking. This section looks at the fuzzing overhead both from the afl-fuzz perspective, with low and high level file system access functions, and from the raw file system access perspective. The section about data writes also tries to answer the question of how damaging fuzzing can actually be to a solid state drive with a relatively limited write endurance.

I did these benchmarks with several major general purpose file systems found in the Debian provided Linux kernels 4.14.13-1 and 4.15.11-1. The kernel differences can show up as differences in numbers between tables, but the numbers inside the same table were measured on the same system. There is also a small variance between fuzzing sessions that I have tried to eliminate by running the fuzzers for long enough that system maintenance and other short running tasks don’t have too big of an impact. Still, the relative mileage may vary between kernel versions, operating system libraries, and the actual physical machines.

Executions/second

General purpose instrumentation guided fuzzers like american fuzzy lop and libFuzzer get their power from the fact that they can repeatedly execute the fuzz target in quick succession. Speed is one factor, and the stability of the program from one execution to another is a second one. Having a stable program ensures that the issues that fuzzing finds can be easily replicated. This basically leads to a compromise in selecting the appropriate fuzzer and fuzz target execution strategy.

It can be that the program has a global state that needs a program restart or other tricks between runs. For these types of situations the default forkserver mode in afl-fuzz is appropriate. On the other hand, if everything can be wrapped inside a function that does not leak its state outside, we can use the much faster persistent mode in afl-fuzz. From this it should actually be quite easy to port the fuzz target to libFuzzer.

In this case libFuzzer shows 735 k executions/second with the sample target when the data is not passed between process boundaries. It is also possible to simulate in-memory file streams with the fmemopen() function, where libFuzzer achieved 550 k executions/second with this example program. This is 15-20 times lower fuzzing overhead than with afl-fuzz. But in this article we focus on american fuzzy lop’s persistent mode and leave the fuzzing engine selection for some other time.

A typical american fuzzy lop persistent mode fuzz target would generally have some generic configuration in the beginning, and then the actual data dependent program execution would reside inside a while (__AFL_LOOP(40000)) { ... } loop. The number 40000 is just the number of iterations that the program does before it restarts, and it can be lower or higher depending on how confident you are that the program does not badly misbehave.

Using the standard input with the persistent mode makes the data reading code look like the following:

    generic_configuration(argc, argv);
    int input_fd = fileno(stdin);
    while (__AFL_LOOP(40000)) {
        ssize_t read_size = read(input_fd, buffer, sizeof(buffer));
        if (read_size < 0) {
            abort();
        }
        // Do the actual fuzzing.
        fuzz_one(buffer, read_size, &values);
    }

Using named files with the persistent mode, on the other hand, requires opening a new file every iteration:

    generic_configuration(argc, argv);
    while (__AFL_LOOP(40000)) {
        int input_fd = open(argv[1], O_RDONLY);
        ssize_t read_size = read(input_fd, buffer, sizeof(buffer));
        if (read_size < 0) {
            abort();
        }
        close(input_fd);
        // Do the actual fuzzing.
        fuzz_one(buffer, read_size, &values);
    }

The example fuzz target

I directly used the code from my Taking a look at python-afl article and modified it to support the file and stdin reader variants. This code is available from target-simple.cpp for the prying eyes. Also the later on introduced C and C++ standard library variants are available as target-fread.cpp and target-ifstream.cpp.

File system dependent results

The benchmarking was done with a loopback device that fully resides in memory. Each of these file systems was created and mounted with its default options, and the performance test was run for 2 minutes. Only a single instance of afl-fuzz was running. Table 1 shows the difference between the same workload on different file systems. The shared memory virtual file system (tmpfs) was the most efficient in both of these cases.

This result also shows an interesting history between the ext2, ext3, and ext4 file systems. Their performance seems to decrease the newer the file system is, likely due to the larger amount of data safety operations that the newer file systems do. There could be a difference when parallel access to a file system is concerned.

Execution speed with different standard libraries

I also wanted to see how the use of more portable file system calls in both C and C++ standard libraries affects the fuzzing speed. Standard libraries like glibc, libstdc++, and libc++ provide a portable file system interface across operating systems. They also provide a certain amount of buffering so that every small read does not result in a system call and suffer from context switch overhead. These higher level library functions also aim to be thread safe so that concurrent manipulation of the same file descriptor would be a little bit less surprising.

When fuzzing, we are usually just reading a small amount of data once and then either close the input file or expect afl-fuzz to reset the input stream for us. Any buffering information must also be discarded and the stream position must be reset so that the input data for the fuzz target is the same as what afl-fuzz expects it to be. If it’s not, it will show as a lower than 100 % stability value on the AFL status screen.

The following is the C code used to benchmark how much overhead the standard C library functions bring into this. Standard input is put into unbuffered mode with the setvbuf() function call, so buffer manipulation should not have a meaningful amount of overhead.

    // C version for glibc benchmarking with standard file manipulation functions.
    FILE* input_fd = stdin;
    setvbuf(input_fd, NULL, _IONBF, 0);
    while (__AFL_LOOP(40000)) {
        size_t read_size = fread(buffer, 1, sizeof(buffer), input_fd);
        fuzz_one(buffer, read_size, &values);
    }

    // We get the fuzz data filename from argv[1].
    while (__AFL_LOOP(40000)) {
        FILE* input_fd = fopen(argv[1], "rb");
        size_t read_size = fread(buffer, 1, sizeof(buffer), input_fd);
        fclose(input_fd);
        fuzz_one(buffer, read_size, &values);
    }

C++ streams don’t have working unbuffered reads that would behave reliably. The closest equivalent is to call the istream::seekg() function and after that istream::clear(). This is theoretically equivalent to what the rewind() function does. The other solution of trying to set istream::rdbuf() to a zero sized one had no real effect on increasing stability.

The C++ code for these fuzzing benchmarks looks like the following:

    // C++ version for libstdc++ and libc++ benchmarking with the standard
    // C++11 file manipulation functions.
    // Streams can not be copied, so std::cin is taken by reference.
    auto& input_fd = std::cin;
    while (__AFL_LOOP(40000)) {
        // Resetting stream state is 2 function calls.
        input_fd.seekg(0, input_fd.beg);
        input_fd.clear();
        input_fd.read(buffer, sizeof(buffer));
        size_t read_size = input_fd.gcount();
        fuzz_one(buffer, read_size, &values);
    }

    // We get the fuzz data filename from argv[1].
    while (__AFL_LOOP(40000)) {
        std::ifstream input_fd(
            argv[1], std::ifstream::in | std::ifstream::binary);
        input_fd.read(buffer, sizeof(buffer));
        size_t read_size = input_fd.gcount();
        input_fd.close();
        fuzz_one(buffer, read_size, &values);
    }

The C++ code is a little bit more verbose than the C code, as there are extra layers of abstraction that need to be removed so that afl-fuzz style data processing is possible. Table 2 then shows the speed differences between these implementations and standard libraries.

We can see that the pure combination of open() and read() functions is the fastest one. The portable C standard library implementation comes quite close with the unbuffered standard input implementation, but everywhere else there is a clear performance loss.

Stability is an important measurement of how reliably fuzzing can continue. Unfortunately, in these tests not everything was 100 % stable. The standard input reading and resetting for libc++ does not fully work as expected and leads to reading old data.

The conclusion here is to stay with the low level unbuffered file manipulation functions or as close to their equivalents as possible. More portable higher level functions for file access may provide speed benefits through buffering for regular file access patterns, but in this case they just add extra layers that slow down the data passing from afl-fuzz to the fuzz target. The exception is when the fuzz target itself relies on using those higher level functions.

Data writes

In the background section I described how it generally is a bad idea to write fuzzing data to a physical disk, as modern solid state drives have a limited write endurance. But one could think that the write caching done by Linux would prevent data from being constantly written to the disk if the lifetime of a file’s contents is really short.

The write caching towards block devices is handled by the page cache in the kernel. But as different file systems may write every new file or file part into a new location, this cache is not necessarily as effective as it could be, even if the writes happen to the same file with very little data.

In this section I let afl-fuzz run for 30 minutes and looked at the kilobytes written, as reported by iostat, to a block device that was a file in memory mounted on a loopback device. This way the device should have looked like a real block device without the risk of shortening the lifespan of any real hardware. Estimated monthly values for disk writes for various file systems can be seen in table 3 for a single afl-fuzz instance.

The numbers for the amount of data that would be written to the device are an extrapolation of the 30 minute run, and the shown values are in terabytes (TiB, 2^40 bytes). These numbers are for the persistent mode and for an extremely light algorithm. They still give some estimate of the amount of data written to the disk even for algorithms that are 10-100 times slower. Nowadays a typical desktop computer can easily have 8-16 threads, and the typical amount of data processed per fuzzer iteration is closer to 1 kilobyte than to the 128 bytes of this case.
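The extrapolation itself is simple proportional scaling. A minimal sketch, assuming that the iostat figure is in units of 1024 bytes and that a month is 30 days (the function name is made up for this example):

```c
#include <stdint.h>

/* Scale a measured write volume to TiB (2^40 bytes) per 30 day month.
 * kib_written: kilobytes (1024 bytes) written during the measurement.
 * measured_minutes: length of the measurement, for example 30.0. */
double tib_per_month(uint64_t kib_written, double measured_minutes)
{
    const double minutes_per_month = 30.0 * 24.0 * 60.0;
    const double kib_per_tib = 1024.0 * 1024.0 * 1024.0;
    return (double)kib_written / kib_per_tib
        * (minutes_per_month / measured_minutes);
}
```

For example, 1 GiB written during a 30 minute run scales to roughly 1.4 TiB per month, as there are 1440 half hour periods in 30 days.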

When looking at these numbers, btrfs, JFS, and XFS are pretty dangerous file systems to accidentally use, as the faster stdin mode, where data is fed to an always open file handle, actually causes a meaningful amount of data to be written to the disk per month. With XFS especially, 4 kilobytes of data is written per fuzzing iteration. Taking into account that many solid state drives have a write endurance of only hundreds of terabytes, accidentally running even one fuzzer for one month or more makes a significant dent in the drive’s lifetime on these file systems.

I also wanted to see what happens when you run multiple fuzzers in parallel on the same machine. The most trivial assumption for multiple fuzzers would be that the file system writes increase about linearly with the number of fuzzers. The results in table 4 show that this is indeed the case for most of the file systems, except for ext2. Fortunately ext2 is not the worst data writer of the measured file systems, and you really need to go the extra mile to use it nowadays, as the newer ext3 and ext4 file systems have replaced it as the default.

| writes/month | stdin x4 | file x4 |
|---|---|---|
| btrfs | 9.53 TiB (78 %) | 0.052 TiB (65 %) |
| ext2 | 0.072 TiB (450 %) | 0.120 TiB (600 %) |
| ext3 | 0.075 TiB (94 %) | 0.147 TiB (130 %) |
| ext4 | 0.090 TiB (64 %) | 0.088 TiB (100 %) |
| JFS | 44.4 TiB (110 %) | 339 TiB (120 %) |
| ReiserFS | 0.069 TiB (82 %) | 0.072 TiB (75 %) |
| tmpfs | - | - |
| XFS | 786 TiB (98 %) | 0.035 TiB (220 %) |

Table 4: Monthly data writes for 4 parallel fuzzers on different file systems and the percentage relative to a number extrapolated from one afl-fuzz instance.

The worrying part here is that two quite actively and widely used file systems, btrfs and XFS, suffer greatly from the writes with the standard input data generation pattern. As this is the faster mode of fuzzing from the executions/second perspective, it’s quite nasty if you put the output directory for fuzzer findings on such a file system.

Theoretical limits

I also wanted to see what would be the theoretical limits for the type of file system access pattern that afl-fuzz does. This is quite a synthetic benchmark, as it does not involve any context switches between processes, signal delivery, shared memory manipulation, file descriptor duplication, or other types of data processing that afl-fuzz does.

The difference between file systems in table 5 is many times larger for standard input based data generation than for afl-fuzz (table 1). When opening and closing files, the overhead is bigger and the difference between different file systems is also smaller.

If you also compare the overhead of pure file system accesses, it is higher than the overhead of libFuzzer’s 735 k executions/second basically for all cases. On top of this there is the overhead of everything else that happens inside the afl-fuzz program.

End notes

You should use tmpfs that fully resides in memory for your fuzzing data generation with afl-fuzz. Usually this is available at /dev/shm/ or /run/shm/ globally, and on systemd based systems also under /run/user/$UID/. It’s faster and less straining on the hardware than trusting that the Linux block layer does not write to the physical device too often. This is especially the case if you happen to use btrfs or XFS file systems.

In the end, this is mostly Linux specific information, and for example OS X based systems need specific tricks to use memory based file systems. The same goes for FreeBSD, where a tmpfs based file system is not mounted by default.
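On Linux it is possible to check programmatically whether a directory really is on tmpfs before starting a long fuzzing session. The following is a small sketch that uses statfs() and the TMPFS_MAGIC constant from the kernel headers. The function name is my own and the check is Linux specific.

```c
#include <linux/magic.h>
#include <sys/vfs.h>

/* Returns 1 if path resides on tmpfs, 0 if it resides on some other
 * file system, and -1 if statfs() fails (for example when the path
 * does not exist). */
int is_on_tmpfs(const char *path)
{
    struct statfs info;
    if (statfs(path, &info) != 0) {
        return -1;
    }
    return info.f_type == TMPFS_MAGIC ? 1 : 0;
}
```

A wrapper script or a small launcher could call is_on_tmpfs() on the intended output directory and refuse to start the fuzzer when the result is not 1.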

-

Avatars, identicons, and hash visualization

Oftentimes we have a situation where we want an easy way to distinguish people of whom we only know the name or a nickname. Probably the most common examples of this are distinguishing different people from each other on various discussion platforms, like chat rooms, forums, wikis, or issue tracking systems. These very often provide the possibility to use handles, often real names or nicknames, and profile images as an alternative. But as people very often don’t provide any profile image, many services use programmatically generated images to give some uniqueness to default profile images.

In this article I’ll take a look at a few approaches to generate default profile images and other object identifiers. I also take a look at the process of creating some specific hash visualization algorithms that you may have encountered on the web. The general name for such programmatically generated images is hash visualization.

Use cases and background

A starting point to visualize arbitrary data is often hashing. Hashing in this context is to make a fixed length representation of an arbitrary value. This fixed length representation is just a big number that can be then used as a starting point for distinguishing different things from each other.

Hash visualization aims to generate a visual identifier for easy differentiation of different objects that takes advantage of the preattentive processing of the human visual system. There are various categories that the human visual system uses to do preattentive processing, like form, color, motion, and spatial position. Different hash visualization schemes knowingly or unknowingly take advantage of these.

Simple methods use specific colors as a visualization method, but at the same time limit the values that can be preattentively distinguished to maybe around 10-20 (around 4 bits of information) per colored area. Using additional graphical elements provides a possibility to create more distinct unique elements that are easy to separate from each other. One thesis tries hash visualization with 60 bits of data with varying success. So the amount of visualized data that can be easily distinguished from each other by graphical means is somewhere between 4 and 60 bits.

Avatars

Perhaps the most common way to distinguish different users of an Internet based service is to use a user name and an avatar image. Avatars are often custom images that users themselves upload to the service. In case the service itself does not want to host avatars, it can link to services like Gravatar that provide avatar hosting as a service. This way a user can use the same avatar in multiple services just by providing their email address.

But what about a situation where the user has not uploaded or does not want to upload an avatar image to the service? There usually is a placeholder image that indicates that the user has not set their custom avatar.

There seem to be three major approaches to generate this placeholder image:

- A generic dummy placeholder image. These often come in a shape of a generic human profile.

- An image from predefined collection of images. These are usually related to a theme that the site wants to present.

- A partially or fully algorithmically generated image. These usually make it possible to get the most variety for placeholders with the least amount of work.

Algorithmically generated images vary greatly and usually come in three varieties:

- Simple images that mainly use a color and a letter to distinguish users.

- Images that consist of a set of predefined parts and color variations.

- More complex shapes usually created fully algorithmically.

I have taken a closer look at different algorithmic avatar creation methods in the form of WordPress identicon, GitHub identicon, and MonsterID. These provide an overview of how algorithmic avatar generation can work. There is also a section on using a character on a colored background to give an example of a simple algorithmic method for default avatar generation.

I want to focus here on visualizing the few methods that I have encountered rather than having an all-encompassing overview of avatar generation. For that, there is the Avatar article on Wikipedia that gives a better textual overview of these and many more methods than I could give.

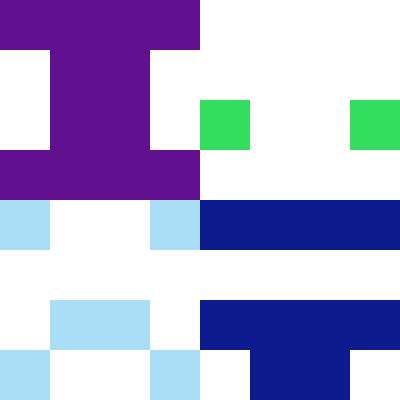

Character on a colored background

Services like Google Hangouts, Telegram, and Signal, and software with collaboration functionality like Jira, generate a default avatar for a user by using the first letter of the user’s name with a colored background. This is a simple method where you need to select a font, a shape that you want to use to surround the letter, and the size of the avatar. Some example avatars generated by this method are visible in figure 1.
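As a rough illustration of this method, the following sketch derives the letter and a background color from a user name. It is not how any of the mentioned services does it: the palette is invented for this example and the FNV-1a hash is just one convenient choice.

```c
#include <ctype.h>
#include <stdint.h>

struct letter_avatar {
    char letter;  /* Upper-cased first character of the name. */
    uint32_t rgb; /* Background color as 0xRRGGBB. */
};

/* 32-bit FNV-1a hash of a zero terminated string. */
static uint32_t fnv1a(const char *text)
{
    uint32_t hash = 2166136261u;
    for (; *text != '\0'; text++) {
        hash ^= (unsigned char)*text;
        hash *= 16777619u;
    }
    return hash;
}

struct letter_avatar make_letter_avatar(const char *name)
{
    /* Hypothetical palette, any set of distinguishable colors works. */
    static const uint32_t palette[] = {
        0xE53935, 0x8E24AA, 0x3949AB, 0x039BE5,
        0x00897B, 0x7CB342, 0xFB8C00, 0x6D4C41,
    };
    const uint32_t colors = sizeof(palette) / sizeof(palette[0]);
    struct letter_avatar avatar;
    /* An empty name gets a placeholder character. */
    avatar.letter = name[0] != '\0'
        ? (char)toupper((unsigned char)name[0]) : '?';
    /* The same name always maps to the same color. */
    avatar.rgb = palette[fnv1a(name) % colors];
    return avatar;
}
```

Rendering the letter with a font on top of the selected color is left out, as that part depends on the graphics library in use.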

WordPress identicon

WordPress identicon has been a well known algorithmically generated avatar on web forums for quite a long time. It has been used to distinguish between users based on their IP addresses and email addresses. Example WordPress identicons can be seen in figure 2, where you should be able to notice that there is some symmetry in these images.

Looking at the code that generates these avatars reveals 44 patterns, shown in figure 3, that the generator uses to create these images. They are symmetrically placed around the center and rotated 90 degrees when they reach the next quadrant. This process is illustrated in figure 4, which shows how these parts are placed and rotated around the center. In case the requested identicon has an even number of parts, these parts will get duplicated. So a 4x4 WordPress identicon will only have 3 distinct parts in it. This is the same number as for a 3x3 WordPress identicon.

GitHub identicon

GitHub is a great collaborative code repository sharing service where users can have identifying images. If a user, however, does not have any profile image, GitHub will generate an identicon that forms a 5x5 pixel sprite. Figure 5 shows examples of what such identicons can look like. The exact algorithm that GitHub uses to generate these identicons is not public, so these example images just imitate the style of GitHub identicons.

Figure 6: GitHub style identicon creation process animated. Visible pixels are horizontally mirrored.

Figure 6 shows the process of creating such an identicon. Basically the given data is hashed, and from that hash a specific color is selected as the value for a visible pixel. Then another part of the hash (15 bits) is used to form an image that is horizontally mirrored. I have used a modified ruby_identicon to generate these images and animations. This library also enables the generation of other sizes than 5x5 sprites.
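The mirroring step can be sketched as follows. As the real GitHub algorithm is not public, the bit order and the names here are assumptions made for illustration: 15 bits fill the three leftmost columns of the 5x5 sprite row by row, and the two rightmost columns are copies of the two leftmost ones.

```c
#include <stdint.h>

#define SPRITE_SIZE 5

/* Fill a 5x5 sprite from the lowest 15 bits of `bits`. A cell value of
 * 1 means a visible pixel and 0 means background. */
void make_sprite(uint32_t bits,
                 unsigned char sprite[SPRITE_SIZE][SPRITE_SIZE])
{
    int bit = 0;
    for (int row = 0; row < SPRITE_SIZE; row++) {
        /* Only columns 0-2 consume bits: 5 rows x 3 columns = 15 bits. */
        for (int col = 0; col < 3; col++) {
            unsigned char visible = (unsigned char)((bits >> bit) & 1u);
            bit++;
            sprite[row][col] = visible;
            /* Mirror horizontally. The middle column maps to itself. */
            sprite[row][SPRITE_SIZE - 1 - col] = visible;
        }
    }
}
```

The color of the visible pixels would come from a different part of the same hash, so one hash value is enough to produce the whole image.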

MonsterID

WordPress MonsterID is a hash visualization method where hashes are converted to various types of monsters. It’s one type of hash visualization method where a lifelike creature is created from predefined body parts. WordPress MonsterID actually offers two types of monsters, the default ones and artistic ones. Here I’m showing some example artistic monsters in figure 7 just because they look better than the default ones.

Figure 8: Creating an artistic MonsterID image by selecting and colorizing an appropriate part from each category.

Figure 8 shows the MonsterID creation process. First there is a lightly colored background on top of which we start forming the monster. We select a body part in the order of legs, hair, arms, body, eyes, and mouth. These parts are shown in figure 9. Then we assign a color to the selected part, unless it’s one of the special cases, and overlay it on top of the image formed from the previous parts. There are special cases for which parts should have a predefined color or be left uncolored, but they do not change the general monster creation process.


Figure 9: Parts from which a MonsterID is created divided into categories (hair, eyes, mouth, arms, body, and legs).

In addition to the WordPress MonsterID plugin, Gravatar also supports old style MonsterIDs. These unfortunately do not look as good as the artistic monsters in the WordPress MonsterID plugin, and there is no option to select an alternative form for these avatars.

OpenSSH visual host key

Hash visualization also has its place in cryptography. The OpenSSH client has an option to display so-called visual host keys.

When an SSH client connects to a remote server that it has never seen before, it needs to accept the public key of this server so that it can communicate with it securely. To prevent man-in-the-middle attacks, the user initiating the connection is supposed to verify that the host key indeed matches. An example message about a host key verification request is shown in figure 10. The idea is that either you have seen this key beforehand or you have some other means to get this key fingerprint and verify that it indeed belongs to the server you are trying to connect to. Whether this works in practice or not is a completely different discussion.

    $ ssh example.com
    The authenticity of host 'example.com (127.1.2.3)' can't be established.
    RSA key fingerprint is SHA256:Cy86563vH6bGaY/jcsIsikpYOHvxZe/MVLJTcEQA3IU.
    Are you sure you want to continue connecting (yes/no)?

Figure 10: The default SSH server key verification request on the first connection.

As you can see, the key fingerprint is not easy to remember and the comparison of two fingerprints can also be quite hairy. When you enable visual host key support, as shown in figure 11, the idea is that you can quickly glance at the image to figure out if the key of a server is different from what you expected. This should not be treated as a method to see if two keys are equal, as different keys can produce the same image.

    $ ssh -o VisualHostKey=true example.com
    The authenticity of host 'example.com (127.1.2.3)' can't be established.
    RSA key fingerprint is SHA256:Cy86563vH6bGaY/jcsIsikpYOHvxZe/MVLJTcEQA3IU.
    +---[RSA 8192]----+
    | ..o.=+ |
    | . E. |
    | . . |
    | . o |
    |o o + S o |
    |.+ o o + * |
    |o.. .o..B.o |
    |.o o.*BB= . |
    |+ ...=o@@+o |
    +----[SHA-256]----+
    Are you sure you want to continue connecting (yes/no)?

Figure 11: Visual host key enabled SSH server key verification request on the first connection.

The algorithm to generate these visual host keys is visualized in figure 12. It’s also described in detail in an article that analyzes the algorithmic security of this visual host key generation: The drunken bishop: An analysis of the OpenSSH fingerprint visualization algorithm.

OpenSSH’s visual host key is formed in such a way that the formation starts from the center of the image. Then 2 bits of the host key fingerprint are selected and the location is moved one step in a diagonal direction. The character shown at each location on the plane depends on how many times that location has been visited. This can be better seen from the animation where each visual host key generation step is visualized as its own frame and where the bits related to the movement are highlighted.
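The walk described above can be sketched in Python 3 roughly as follows. This is written from my reading of the drunken bishop description and should be treated as an approximation. The field size (17x9), the symbol table, and the way bits map to directions are assumptions of this sketch, and the OpenSSH source code remains the authoritative reference. The function name `drunken_bishop` is made up for this example.

```python
import hashlib

# Symbols for visit counts 0..14, followed by the start (S) and end (E) markers.
SYMBOLS = " .o+=*BOX@%&#/^SE"
WIDTH, HEIGHT = 17, 9


def drunken_bishop(fingerprint: bytes):
    """Return the rows of a random art field for the given fingerprint bytes."""
    counts = [[0] * WIDTH for _ in range(HEIGHT)]
    x, y = WIDTH // 2, HEIGHT // 2
    start_x, start_y = x, y
    max_count = len(SYMBOLS) - 3  # highest index that is a plain visit counter
    for byte in fingerprint:
        for _ in range(4):  # each byte holds four 2-bit moves
            x += 1 if byte & 0x1 else -1  # lowest bit: right or left
            y += 1 if byte & 0x2 else -1  # next bit: down or up
            # Stay inside the field when a move would cross the border.
            x = min(max(x, 0), WIDTH - 1)
            y = min(max(y, 0), HEIGHT - 1)
            if counts[y][x] < max_count:
                counts[y][x] += 1
            byte >>= 2
    rows = [[SYMBOLS[count] for count in row] for row in counts]
    rows[start_y][start_x] = "S"
    rows[y][x] = "E"  # the end marker wins if the walk ends at the start
    return ["".join(row) for row in rows]


if __name__ == "__main__":
    digest = hashlib.sha256(b"example host key").digest()
    print("+" + "-" * WIDTH + "+")
    for row in drunken_bishop(digest):
        print("|" + row + "|")
    print("+" + "-" * WIDTH + "+")
```

Because every move is diagonal and frequently visited cells only change their symbol, two different fingerprints can end up with the same picture, which matches the caveat about not using the image to prove that two keys are equal.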

The exact details how the key is generated can be seen from OpenSSH’s source code. This source code also refers to a paper called Hash Visualization: a New Technique to improve Real-World Security. But the fact that OpenSSH is usually limited to terminals with text interface makes it quite hard to use the more advanced Random Art methods described in the paper. But the idea for host key verification by using images has at least taken one form in the OpenSSH world.

Conclusions

I presented here some methods that I have encountered over the years on various sites and programs. I can not possibly cover all of them, as there likely are as many algorithms to create avatars as there are people inventing them. The biggest question with any of these algorithms is how many bits they should try to visualize and what types of graphical elements they can use in the visualization without just creating indistinguishable clutter.

-

Taking a look at python-afl

I have been using american fuzzy lop to fuzz various C and C++ programs and libraries. It is a wonderfully fast fuzzer that is generally easy to get started with and it usually finds new bugs in programs that have not been fuzzed previously. Support for american fuzzy lop instrumentation has also been added for other languages and I decided to try out how it works with Python. More specifically with the reference CPython implementation of it.

Fuzzing Python programs with american fuzzy lop

American fuzzy lop generally works by running a program that is compiled with american fuzzy lop instrumentation built in. It executes the program with afl-fuzz command that modifies the input data that is fed to the program, monitors how the program behaves, and registers everything that causes abnormal program behavior. This works well for natively compiled programs, but causes various issues with interpreted programs.

Python is by default an interpreted language, so to execute Python programs, you need to start a Python interpreter before executing your code. This means that if you would instrument the Python interpreter with american fuzzy lop instrumentation and run the interpreter with afl-fuzz, it would mostly fuzz the inner workings of the interpreter, not the actual Python program.

Fortunately there is python-afl module that enables american fuzzy lop instrumentation for just the Python code instead of instrumenting the Python interpreter. In native programs american fuzzy lop compiler wrapper (afl-gcc, afl-clang, afl-clang-fast) adds the necessary instrumentation and the connection to afl-fuzz. Python-afl is, however, designed in such way that it doesn’t try to wrap the whole program, but requires you to create a wrapper module that initializes fuzzing.

The most simple way to wrap a Python program with python-afl is to initialize python-afl and then run the program (assuming that a main() function exists):

    import afl
    afl.init()
    import fuzzable_module
    fuzzable_module.main()

This script, saved to fuzz-wrapper.py, can then be run with the py-afl-fuzz command that wraps afl-fuzz for Python programs:

    py-afl-fuzz -m 400 -i initial-inputs/ -o fuzzing-results/ -- \
        python fuzz-wrapper.py @@

More details about these command line switches can be found from AFL readme file. This then brings out the famous american fuzzy lop status screen, but now for Python programs:

Next sections will explain in more details how to make fuzzing these programs more efficient and what pitfalls there could be in Python programs from fuzzing efficiency point of view.

Afl-fuzz modes and their python-afl equivalents

Generally afl-fuzz provides 4 fuzzing modes that differ in how the program execution between different fuzzing inputs behaves:

- Dumb mode that just executes the program by doing fork() and execv(). This is the slowest mode that does not rely on any fancy tricks to speed up program execution and also does not provide any insights how the program behaves with different inputs.
- Basic fork server mode where the fuzzed binary does all the initialization steps that happen before calling the main() function and then the program is repeatedly forked from that point on. This also includes instrumentation that is compiled in to the program so there already is some insight on what is happening inside the program when a specific input is processed. There exists QEMU mode for afl-fuzz that technically enables fork server mode for uninstrumented binaries, but with some performance penalty.
- Deferred instrumentation that works in similar fashion as the basic fork server mode. Instead of forking just before calling the main() function, this moves the fork point further down the line, so that heavy program initialization steps can be skipped if they can be executed independently of the input.
- Persistent mode where the fuzzable part of the program is repeatedly executed without resetting the program memory every time the program is called. This only works in practice if the program does not have a modifiable global state that can not be reset to the previous state.

Afl-fuzz generates new inputs and analyzes the program execution results roughly at the same speed regardless of the mode. So these modes are listed from the least to the most efficient, in the sense of how much overhead there is for fuzzing one input. They are also in order of how complex they are to integrate into an existing program that has not been made to be fuzzed, especially as the fastest modes require clang to be available as a compiler and the fuzzable program needs to compile and link with it.

Python-afl, fortunately, provides equivalent modes without having to use special tools. These are also very fast to try out, as you don’t need to compile Python programs from scratch.

The dumb mode would be just equivalent of running Python interpreter directly with afl-fuzz without any instrumentation, so we will skip over it. The more interesting part is to use the deferred instrumentation. The code in the introductory section called afl.init() before the fuzzable module was imported. This is the safest approach, as the fuzz target might do something with the input at import time. But more realistically, Python programs generally only execute import statements, possibly conditionally, during the start-up and don’t handle any user provided data yet. So in this case, we can do imports first and move the afl.init() call just before where the actual work happens:

    import afl, fuzzable_module
    afl.init()
    fuzzable_module.main()

We can gain some speed-ups with this by calling the os._exit() function instead of letting Python exit in the usual fashion, where all the destructors and other at-exit functions are called:

    import afl, fuzzable_module, os
    afl.init()
    fuzzable_module.main()
    os._exit(0)

Previous examples assume that the input file generated by the fuzzer comes as the first parameter on the command line. This is quite a good assumption, as many data processing modules for Python include a command line interface where they read and process files given on the command line. But if we can directly call the data processing function, we can instead use the standard input to feed the data:

    import afl, fuzzable_module, os, sys
    afl.init()
    fuzzable_module.process_data(sys.stdin)
    os._exit(0)

With Python 3 comes additional complexity. Python 3 processes the standard input using the encoding specified in the environment. Often in Unix environments it is UTF-8. As afl-fuzz mostly does bit manipulation, the input is going to end up as broken UTF-8 data, which results in an exception when reading from the standard input file object. To work around this, you can use sys.stdin.buffer instead of sys.stdin in Python 3 based programs. Or create a shim that always results in raw bytes:

    import afl, fuzzable_module, os, sys
    try:
        # Python 3:
        stdin_compat = sys.stdin.buffer
    except AttributeError:
        # There is no buffer attribute in Python 2:
        stdin_compat = sys.stdin
    afl.init()
    fuzzable_module.process_data(stdin_compat)
    os._exit(0)

The fastest persistent mode requires that the program should not have a global state where the previous program execution affects the next one. There unfortunately is a surprising amount of global state in Python programs. It is not that uncommon to initialize some specific variables only during the program execution and then re-use the results later. This usually is harmless, but negatively affects the program stability that afl-fuzz is going to show in its status screen.
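As a hedged illustration of the kind of global state meant here, the following Python 3 sketch uses a made-up `process_data()` function with a lazily initialized module-level cache. The names are hypothetical and not taken from any real fuzz target. The same input takes one code path on the first call in a process and another path on every later call, which is the kind of difference that may show up as reduced stability in persistent mode.

```python
_cache = None  # hypothetical lazily initialized module-level state


def process_data(data: bytes):
    """Return the computed value and the name of the code path that was taken."""
    global _cache
    if _cache is None:
        # Only the first call in a process executes this branch.
        _cache = {byte: byte * 2 for byte in range(256)}
        path = "initialized cache"
    else:
        path = "reused cache"
    return sum(_cache[byte] for byte in data), path
```

Calling `process_data(b"abc")` twice in one process returns the same value both times, but with different path names. One way to avoid the difference is to trigger such initialization once before the fuzzing loop starts.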

Persistent mode code for a fuzzable program could look like the following, including the learnings from the deferred instrumentation and its speedups:

    import afl, fuzzable_module, os, sys
    try:
        # Python 3:
        stdin_compat = sys.stdin.buffer
    except AttributeError:
        # There is no buffer attribute in Python 2:
        stdin_compat = sys.stdin
    while afl.loop(10000):
        fuzzable_module.process_data(stdin_compat)
    os._exit(0)

Persistent mode stability

This section has been added 6 months after writing the original article when Jakub Wilk pointed out potential stability issues with the original persistent mode code example on OS X and FreeBSD.

Some systems where Python runs implement file manipulation by using buffered functions. The most prominent operating system where afl-fuzz runs that uses buffered I/O in Python is OS X/macOS, and the persistent mode example in the previous section does not work in Python 2 on these systems. The problem shows up as a low stability percentage in the afl-fuzz user interface. Low stability means that, from the afl-fuzz perspective, the same input leads the program to take different paths between subsequent executions, because the input read by the program is not the same as what afl-fuzz has provided.

Buffered reads from a file work by calling low level file reading system calls with a moderately sized input buffer. This input buffer then makes sure that if we do a lot of small file system reads, like reading frame based data formats frame by frame, they will not result in as many system calls as they would result without buffering. This generally increases program performance, as programmers can rely on file system functions to work relatively fast even with non-optimal access patterns.
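The read-ahead behaviour described above can be observed with a small Python 3 sketch. It reads a few bytes through a buffered file object and then compares the position the stream reports with the position of the underlying file descriptor. The helper name `offsets_after_small_read` and the temporary file are assumptions of this example, and the exact amount of read-ahead depends on the platform and the Python version.

```python
import os
import tempfile


def offsets_after_small_read(payload: bytes, count: int = 4):
    """Read `count` bytes via a buffered stream.

    Returns (logical_offset, descriptor_offset).
    """
    with tempfile.TemporaryDirectory() as directory:
        path = os.path.join(directory, "input.bin")
        with open(path, "wb") as output:
            output.write(payload)
        with open(path, "rb") as stream:  # binary files are buffered by default
            stream.read(count)
            # Position as seen by the code that uses the stream:
            logical = stream.tell()
            # Position of the file descriptor that the buffer was filled from:
            descriptor = os.lseek(stream.fileno(), 0, os.SEEK_CUR)
    return logical, descriptor


if __name__ == "__main__":
    print(offsets_after_small_read(b"x" * 1000))
```

The descriptor has typically advanced much further than the four bytes that were requested. When the same descriptor is reused for the next input, the buffered stream and the descriptor can therefore disagree about what should be read next unless the stream is rewound.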

You need to set the stream position to the beginning when using persistent mode with afl-fuzz on systems that use buffered I/O and you are reading from the standard input. All these examples read data from the standard input, as it is generally more efficient than opening a new file for each fuzzing iteration. The stream position can be rewound to the beginning with the file.seek(0) method.

Unfortunately not all streams support seeking and with afl-fuzz the situation is more tricky. If you execute the program normally and read from sys.stdin, by default the standard input does not support seeking. But when you run it through afl-fuzz, the standard input is in reality a file descriptor that is backed by a file on a file system. And this file supports seeking. So you need some extra code to detect if the standard input supports seeking or not:

    import afl, fuzzable_module, os, sys
    try:
        # Python 3:
        stdin_compat = sys.stdin.buffer
    except AttributeError:
        # There is no buffer attribute in Python 2:
        stdin_compat = sys.stdin
    try:
        # Figure out if the standard input supports seeking or not:
        stdin_compat.seek(0)
        def rewind(stream):
            stream.seek(0)
    # In Python 2 and 3 seek failure exception differs:
    except (IOError, OSError):
        # Do nothing if we can not seek the stream:
        def rewind(stream):
            pass
    while afl.loop(10000):
        fuzzable_module.process_data(stdin_compat)
        rewind(stdin_compat)
    os._exit(0)

Adding standard input rewinding more than doubles the fuzzing overhead for the persistent mode on the Debian GNU/Linux system that I have used to do these benchmarks. On OS X the effect is less than a 10 % overhead increase for Python 3, and rewinding is required for Python 2 to even work with afl-fuzz on OS X.

Benchmarking different afl-fuzz modes

I wanted to measure how these different afl-fuzz modes behave with Python. So I created a small fuzz target whose main algorithm does some conditional computation based on the input data and prints out the result:

    def fuzz_one(stdin, values):
        data = stdin.read(128)
        total = 0
        for key in data:
            # This only includes lowercase ASCII letters:
            if key not in values:
                continue
            value = values[key]
            if value % 5 == 0:
                total += value * 5
                total += ord(key)
            elif value % 3 == 0:
                total += value * 3
                total += ord(key)
            elif value % 2 == 0:
                total += value * 2
                total += ord(key)
            else:
                total += value + ord(key)
        print(total)

This is just to exercise the fuzzer a little bit more than a trivial function that does nothing would do. I also created an equivalent fuzz target in C++ to give numbers to compare what kind of an overhead different fuzzing modes incur for both Python and native applications. The approximate results are summarized in table 1. The actual scripts used to generate this data are available from following links: measure-times.sh, target-simple.template.py, and target-simple.cpp.

| Mode | Python 2 | Python 3 | Native |
|---|---|---|---|
| dumb mode | 110/s | 47/s | 1200/s |
| pre-init | 130/s | 46/s | 5800/s |
| deferred | 560/s | 260/s | 6800/s |
| quick exit | 2700/s | 2100/s | 8700/s |
| rewinding persistent mode | 5800/s | 5900/s | - |
| persistent mode | 17000/s | 15000/s | 44000/s |

Table 1: afl-fuzz benchmarks for various fuzzing modes for Python 2, Python 3, and for C++ versions of the example fuzz target.

These results show that it is possible to write a fuzzable program in Python in such a way that the fuzzing start-up overhead is only three to four times larger than for a native one with afl-fuzz. This is an excellent result considering that generally algorithms implemented in Python can be considered 10-100 times slower than ones implemented in C family languages. But if you want to use Python for performance critical tasks, you are likely using Cython or writing the performance critical parts in C anyway.

There is a clear performance difference between Python 2.7.14 and Python 3.6.4, especially when the start-up and exit optimization tricks are not all in use. This difference is also visible in Python start-up benchmarks at speed.python.org. This difference gets smaller when the persistent mode is used, as the Python executable is not shut down immediately after processing one input. What can also help Python 3 in the persistent fuzzing mode is the fact that the tracing function that python-afl sets with sys.settrace() is called only half as often with Python 3 as it is called with Python 2 for this fuzz target.
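To get a feeling for how often a trace function installed with sys.settrace() is invoked, the calls can be counted with a small Python 3 sketch like the one below. This is not python-afl's tracer. `count_trace_events` and `sample` are hypothetical helpers that only count the events the interpreter reports while a function runs.

```python
import sys


def count_trace_events(func, *args):
    """Run func(*args) and count how often the trace function is invoked."""
    counts = {}

    def tracer(frame, event, arg):
        counts[event] = counts.get(event, 0) + 1
        return tracer  # keep receiving events for this frame

    previous = sys.gettrace()
    sys.settrace(tracer)
    try:
        func(*args)
    finally:
        sys.settrace(previous)  # restore whatever tracer was active before
    return counts


def sample(data):
    """Small stand-in workload with a loop and a branch."""
    total = 0
    for byte in data:
        if byte % 2 == 0:
            total += byte
    return total


if __name__ == "__main__":
    print(count_trace_events(sample, b"abcdefgh"))
```

Running the same script under different interpreter versions gives a rough idea of how the number of trace callbacks differs between them for a given piece of code.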

More speed for repeated Python executions

Python enables imports and other type of code loading at any phase of the program execution. This makes it quite possible that program has not fully loaded before it is executed for the first time. This is usually used as a configuration mechanism to configure the program based on the runtime environment and other type of configuration. It also provides the possibility to write plugins, similarly to what dynamically loaded libraries provide.

You can see from table 1 how the fuzzing overhead decreases a lot when the fuzzing start point is moved after the import statements for this simple example program. So when I was fuzzing an old version of flake8, I tried to see if it had any hidden configuration statements that would execute and cache their results for repeated calls. And it did!

Initially I used following type of fuzzing wrapper for flake8:

    import afl, sys, flake8.run
    afl.init()
    flake8.run.check_code(sys.stdin.read())

It is basically a simple wrapper that imports all what it needs and then fuzzes what is fed from the standard input to the program. But the performance of this was horrible, around 15 executions/second. So I tried to see what happens when I change the code a little bit by calling the flake8.run.check_code() function with an empty string before setting the fuzzing starting point:

    import afl, sys, flake8.run
    # Make sure that the hidden runtime configuration is executed:
    flake8.run.check_code("")
    afl.init()
    flake8.run.check_code(sys.stdin.read())

This doubled the execution speed to around 30 executions/second. It is still quite slow for the small inputs that afl-fuzz initially creates, but an improvement nonetheless. I looked at what flake8 does when it is executed and the following line popped up:

    from pkg_resources import iter_entry_points

There basically is a hidden import statement in the execution path whose result is cached after the first time it is encountered. Also this pkg_resources.iter_entry_points() function is used to configure the program at runtime and that also adds some extra overhead to the process.

Flake8 also by default tries to execute checks in parallel with the multiprocessing module. This might be a good idea when you have multiple files to verify at once, but during fuzzing it just adds unneeded overhead. Also the fact that it starts a new process makes the fuzzer lose all information about what is happening in the subprocess. Fortunately in flake8 it was possible to override the detected multiprocessing support by just setting one variable to False, and then flake8 will act as if there is no multiprocessing support. This increased the average fuzzing speed of flake8 threefold.

The final speedup with flake8 came when looking at how flake8 is constructed. It is basically a wrapper around mccabe, pycodestyle, and pyflakes packages. So rather than fuzz flake8, it is much more productive to create a fuzz target for each one of those packages individually. I did this for pycodestyle and ended up finally executing it with around 420 executions/second for trivial data and around 200 executions/second for more realistic data. So basic recommendations on how to fuzz native programs also apply for Python.
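The hidden import effect described earlier in this section can be reproduced without flake8. In the Python 3 sketch below, `check_code` is a hypothetical stand-in that hides an import in its execution path, and the standard library module colorsys plays the role of a heavier dependency such as pkg_resources. The timings it prints depend entirely on the machine and on what has already been imported.

```python
import sys
import time


def check_code(source: str) -> int:
    """Hypothetical checker with an import hidden in its execution path."""
    # The first call may have to load the module from disk. Later calls
    # find it in the sys.modules cache and return almost immediately.
    import colorsys  # stand-in for a heavier dependency
    return len(source.splitlines())


def timed(func, *args):
    """Return (result, elapsed seconds) for one call of func(*args)."""
    started = time.perf_counter()
    result = func(*args)
    return result, time.perf_counter() - started


if __name__ == "__main__":
    _, cold = timed(check_code, "")
    _, warm = timed(check_code, "")
    print("first call: {:.6f} s, second call: {:.6f} s".format(cold, warm))
    print("cached:", "colorsys" in sys.modules)
```

Calling the function once before the fuzzing start point, as in the flake8 wrapper above, moves the cost of the first call out of every fuzzing iteration.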

Monkey patching around fuzzing barriers

As american fuzzy lop knows almost nothing about the input, it can encounter various impediments (see section 13 from afl’s README.txt) when the file format includes any values that depend on the previously encountered data. This is especially problematic with checksums and is also an issue with other mutation based fuzzers. To work around this in the C world, you can generally use the C preprocessor to turn off checksum verification when such a thing is encountered. This also applies for all other types of barriers that might skew fuzzing results, like random number usage.

Unfortunately Python does not have preprocessor support by default, so this type of conditional compiling is out of the question. Fortunately Python provides the possibility to do monkey patching where you can replace functions or methods at runtime. So to make a library more fuzzer friendly, you can monkey patch all functions related to data verification to always return True, or return some constant value when checksums are considered.

I used this approach to fuzz the python-evtx 0.6.1 library. Python-evtx is a library to parse Windows event log files and is one of the first hits when you search Python Package Index with “pure python parser” keyword. The file format includes a CRC32 checksum that will prevent the fuzzer from being able to meaningfully modify the input file, as almost all modifications will create an incorrect checksum in the file.

To monkey patch around this issue, I searched the source code for all functions that potentially have anything to do with checksum generation and made them always return a constant value:

    import Evtx.Evtx
    checksum_patches = (
        (Evtx.Evtx.ChunkHeader, "calculate_header_checksum"),
        (Evtx.Evtx.ChunkHeader, "header_checksum"),
        (Evtx.Evtx.ChunkHeader, "calculate_data_checksum"),
        (Evtx.Evtx.ChunkHeader, "data_checksum"),
        (Evtx.Evtx.FileHeader, "checksum"),
        (Evtx.Evtx.FileHeader, "calculate_checksum"),
    )
    for class_obj, method_name in checksum_patches:
        setattr(class_obj, method_name, lambda *args, **kw: 0)

The checksum_patches variable holds all the functions that you need to overwrite to ignore checksums. You can use setattr() to overwrite these methods on class level with an anonymous function lambda *args, **kw: 0 that always returns 0 and takes any arguments. Taking any number of arguments in any fashion is enabled with *args and **kw and this syntax is explained in keyword arguments section on Python’s control flow tools manual.

Takeaways

I was impressed by how low a fuzzing overhead Python programs can have when compared to C family languages. When looking at how python-afl is implemented, it becomes quite clear that the deferred mode of afl-fuzz has a great part in this, as the whole Python environment and program initialization is skipped between different fuzzing runs.

Making a Python module fuzzable was also easier than in C family languages. Although american fuzzy lop is already the easiest fuzzing engine to get started with, the fact that it uses clang to do the more advanced tricks often gives headaches when trying to get software to compile. The fact that I did not have to use any modified Python interpreter to get started but only had to import the afl module made me realize how many steps I skipped that I normally have to do when using american fuzzy lop on new systems.

Thanks to Python’s dynamic execution and monkey patching capabilities I could also try out fuzz target creation with external libraries without having to actually modify the original code. Especially selecting some specific functions to fuzz and overriding checksum generation would generally require nasty software patches with C family languages. Especially if there is a main() function to override.

It was also nice to realize that the os._exit(0) equivalent in the standard C library, the _Exit(0) function call, could help make light native fuzz targets even faster. The process cleanup in this relatively trivial C++ program adds almost 30% overhead for repeated program execution. It will, however, likely break any sanitizer that does any verification work during the exit, like searching for dynamically allocated memory that was not freed.
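What the quick exit skips can be seen with a small Python 3 sketch that runs two snippets in fresh interpreters. The snippet contents and the `run_snippet` helper are made up for this illustration. The only claim is the documented behaviour that os._exit() terminates the process without running the handlers registered with atexit and without flushing buffered output.

```python
import subprocess
import sys

# A program that registers an exit handler and exits normally.
NORMAL_EXIT = """
import atexit
atexit.register(lambda: print("cleanup ran"))
print("work done", flush=True)
"""

# The same program, but terminated with os._exit() at the end.
QUICK_EXIT = NORMAL_EXIT + """
import os
os._exit(0)
"""


def run_snippet(source: str) -> str:
    """Run source in a fresh interpreter and return what it printed."""
    completed = subprocess.run(
        [sys.executable, "-c", source],
        stdout=subprocess.PIPE,
        universal_newlines=True,
        check=True,
    )
    return completed.stdout


if __name__ == "__main__":
    print(repr(run_snippet(NORMAL_EXIT)))
    print(repr(run_snippet(QUICK_EXIT)))
```

The explicit `flush=True` matters here: output that is still sitting in a buffer when os._exit() is called is lost, which is the same class of side effect as the sanitizer checks mentioned above.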

-

Nanorepositories

I recently encountered a microservice antipattern called nanoservice that is described in following manner:

Nanoservice is an Anti-pattern where a service is too fine grained. Nanoservice is a service whose overhead (communications, maintenance etc.) out-weights its utility.

I have encountered a lot of similar situations with source code repositories where different parts of a program or a system that was shipped as a single entity were divided into smaller repositories. The code in these repositories was not used by anything else than the product (the unit of release) that the code was part of.

In the most extreme case there were thousands of smaller repositories. Many of those repositories were just holding less than 10 files in a deep directory hierarchy implementing some tiny functionality of the whole. Plus some boilerplate functionality for building and repository management that could just have been a couple of extra lines in a build system for a larger entity.

In more common cases, one product consists of dozens of smaller repositories where one or two repositories get over 90% of the whole weekly commit traffic and other repositories just get one commit here and there. Or that there are multiple interlinked repositories (see an example in How many Git repos article) that depend on each other and very often all of them need to go through the same interface changes.

Sometimes there also is a situation that all the work is done in smaller repositories and then there is one superproject[1, 2, 3] that is automatically updated when a commit happens in any of its child projects. So basically you have one big repository that just consists of pointers to smaller repositories and has one unneeded layer of indirection. Also instead of making one commit that would reveal integration problems immediately, you now need to have multiple commits to reveal these issues. With some extra unneeded delay.

I would suggest calling these types of repositories nanorepositories. A nanorepository is a repository that holds a subsystem that is too limited to stand on its own and needs a bigger system to be part of. This bigger system usually is also the only system that is using this nanorepository. The nanorepository is also owned by the same organization as the larger entity it’s part of. Therefore it doesn’t give any advantages, for example, in access control. Nanorepositories can hold just a couple of files, but they can also be relatively large applications that are, however, tightly coupled with the system they are part of.

Downsides of premature repository division

Nanorepositories are a case of premature optimization for code sharing where there is no real need for it. There are articles (Advantages of monolithic version control, On Monolithic Repositories) and presentations (Why Google Stores Billions of Lines of Code in a Single Repository, F8 2015 - Big Code: Developer Infrastructure at Facebook’s Scale) talking about the advantages of monolithic repositories, but those advantages can be hard to grasp without knowing the disadvantages of the other end.

I’ll list some issues that I have encountered when working with independent repositories. These all lead to a situation where developers need to spend extra time in repository management that could be avoided by grouping all software components that form the final product into one repository.

Expensive interface changes

Interface changes between components become expensive. You also need cumbersome interface deprecation policies and a way to support the old and new interface versions between repositories until the change has propagated everywhere. It can take months or years to ensure that all interface users have done the intended interface change. And if you don’t have a good search at your disposal, you still can’t be sure about it before you really remove the old interface.

With separate repositories it’s often the case that you can’t easily search where the interface you are deprecating is used. This means that you don’t know beforehand what is actually depending on the interface. There naturally are search engines that span over multiple repositories, but they very rarely beat a simple grep -r (or git grep) command whose output you can further filter with simple command line tools. Especially if there are hundreds of small repositories that you need to include in your search.

Ignore file duplication

Often you need to add ignore files (.gitignore, .hgignore, etc…) to prevent junk going in to the repository by accident. Situations that can generate junk next to your code can be for example:

- In-source build (versus separate build directories) generated files (*.a, *.o, *.exe, *.class, *.jar…).
- Using any editor that creates backup and other files next to the file you are editing (*~, *.swp, *.bak…).
- Using interpreted languages, like Python, whose default implementation byte compiles the scripts for faster start-up (*.pyc, *.pyo…).
- Using integrated development environments that require their own project directories.

All these generic ignore rules need to be included in every project in addition to project specific ignores. Another possibility is forcing these ignore rules on developers themselves instead of taking care of them centrally. In case of nanorepositories there likely is just one or two languages used per repository, so the amount of ignore rules likely depends on the development environment that the developers work with. But it’s still needless duplication when you could get by without.

Re-inventing inefficient build system rules

Small repositories lead into having to reinvent build system rules for every repository from scratch if you want to test and build your component in isolation. Or doing a lot of code duplication or including references to a repository including common build rules. This also applies for test runners, as different levels of testing for different languages usually have their own test runners. Sometimes multiple test runners per language, that all have some non-default options that provide various advantages in test result reporting.

Modern build systems, like ninja and Bazel, usually work on knowing the whole build graph of the system that they are trying to build. This makes it easier to discover dependencies and to rebuild only the parts that actually need rebuilding. Building every repository independently from each other leads into recursive build systems that treat their inputs as black boxes (Bitbake, npm, Maven, Make…). Changes in these black boxes are either communicated with version number changes or by always rebuilding the component in question. This leads into a wasteful process and resource usage when compared to trunk based development of monolithic repositories.

Overly complicated continuous integration machinery

One of the defining principles of modern software development is having a working continuous integration system in place. This ensures that independent changes also work when they leave developer’s machine and also work when they are integrated with the rest of the product that the change is part of. And this is done multiple times every day. This, combined with trunk based development, keeps integration issues short (minutes to days) and avoids many-month release freezes compared to branched or forked development methods.

Nanorepositories likely end up with repository specific checks in the continuous integration machinery that only verify that the component in the repository itself works well. And if this continuous integration machinery generates check jobs automatically per repository, each repository likely needs an entry point (like `make test` or a `test.sh` script) to execute those tests. The same applies to compilation. Not to mention the extra work when trying to compile and test against different systems and runtime instrumentation (like AddressSanitizer).

When the component finally gets integrated with everything else and the system breaks, figuring out the exact commit where the breakage happens (besides the integrating one) can be really painful. This is because it is easily possible to have dozens to thousands of commits between component releases. See a physical world example where components work perfectly together, but fail when integrated. And its hotfix.
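
To give a feeling for the search effort, here is a minimal sketch of bisecting over a range of commits, the same idea that `git bisect` uses. It assumes that the integrated system can be built and tested at any intermediate commit and that the breakage stays once introduced. With separately released components that first assumption is often exactly what does not hold. The function `first_bad_commit` and the simulated history are hypothetical.

```python
def first_bad_commit(commits, is_good):
    """Binary search for the first failing commit.

    Assumes commits[0] is good, commits[-1] is bad, and that every commit
    after the first bad one is also bad.
    """
    lo, hi = 0, len(commits) - 1  # invariant: commits[lo] good, commits[hi] bad
    steps = 0
    while hi - lo > 1:
        mid = (lo + hi) // 2
        steps += 1
        if is_good(commits[mid]):
            lo = mid
        else:
            hi = mid
    return commits[hi], steps


# Simulated history of 1000 commits where commit 617 introduced the breakage.
history = list(range(1000))
culprit, steps = first_bad_commit(history, lambda commit: commit < 617)
print(culprit, steps)
```

Even with a thousand commits between releases only about ten integration test runs are needed, but each of them requires a buildable and testable combination of all components.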

A case for small repositories

Nanorepositories should not be confused with small independent repositories, as not everything needs to aim to be a part of a bigger product. A very common reason for small repositories is the combination of ownership management with shareable components. Most open source projects are owned by certain people, or by an organization, and there just is no good case for them to be part of anything bigger. This is especially true if they provide independent components that really are used by multiple external entities. The same downsides, however, generally apply to a collection of open source projects as to products consisting of multiple repositories.

- In-source build (versus separate build directories) generated files (

-

Static code analysis and compiler warnings

Compiler generated warnings are one form of static code analysis that provides a codified form of certain types of beneficial programming practices. Modern compilers for C family languages (C, C++, and Objective-C) provide hundreds of different warnings whose usefulness varies depending on the project and its aims.

In this article I will examine what level of issues compiler warnings can find and what the cost of enabling warnings is, and analyze compiler warning flag lists for both clang and GCC compilers.

Levels of static code analysis

Compiling C family languages usually involves a preprocessor, a compiler, an assembler, and a linker. This also means that static code analysis can be done in various phases of program construction. These generally are:

- Analysis on plain source files.

- Analysis on preprocessed source files.

- Analysis on compilation unit level.

- Link-time analysis.

This multi-stage program construction results in difficulties for tools that are not called with the exact same arguments that provide information about preprocessor definitions, and include and library directories. For example tools like splint, Cppcheck, and many editor front-ends work outside the build system and can produce false warnings because they cannot see inside some macro definitions that were not included in the simple static analysis setup. This becomes an issue with larger projects that do not necessarily have the most straightforward build setups and the most trivial header file inclusion policies. This does not mean that such tools are useless, but they will produce false positive warnings that can be really annoying unless they are silenced or ignored in some way.

Analysis on preprocessed source files already provides a pretty accurate picture of what kind of issues there can be in the program, but it is not necessarily enough. In the compilation phase compilers constantly transform the program into new, functionally equivalent, forms during optimization phases, which can even result in unexpected code removal that is not necessarily trivial to notice. The compilation phase also gives more opportunities for target platform specific static code analysis. For example pipeline stalls or value overflows due to incorrect assumptions on data type sizes can usually be noticed only after the target platform is known.
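
The data type size issue can be illustrated with a small simulation. In C, unsigned arithmetic wraps modulo 2^N, where N is the width of the type, and the width of `unsigned int` depends on the target platform. The helper names below are hypothetical and the code only models that wrap-around, it is not what a compiler does internally.

```python
def wrap_unsigned(value, bits):
    """Reduce value modulo 2**bits, as C does for unsigned arithmetic."""
    return value % (1 << bits)


def add_unsigned_int(a, b, int_bits):
    """Add two values as if they were `unsigned int` of the given width."""
    return wrap_unsigned(a + b, int_bits)


# The same source expression, 40000u + 40000u, on two hypothetical targets.
print(add_unsigned_int(40000, 40000, 32))  # fits when int is 32 bits wide
print(add_unsigned_int(40000, 40000, 16))  # wraps when int is 16 bits wide
```

The expression is harmless on a target with 32-bit `int` and silently yields 14464 on a target with 16-bit `int`, which is why such problems can be diagnosed reliably only once the target platform is known.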

The final phase in program construction that provides options for static analysis is the linking phase. In the linking phase the linker takes care that all the functions and global variables that the program uses come from somewhere and that there are no conflicting duplicate names defined. This should also enable some automatic detection capabilities for memory leaks and similar issues that come from calling functions defined in different compilation units. I’m not sure if any freely available static analyzer does this.
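
The two basic link-time checks mentioned above can be sketched as a simplified model. Real linkers also deal with weak symbols, symbol visibility, and libraries, none of which is modeled here. The function `link_check` and the contents of `UNITS` are hypothetical.

```python
from collections import Counter


def link_check(units):
    """Return (undefined, duplicates) for a set of compilation units.

    units maps a unit name to {"defines": set of names, "uses": set of names}.
    """
    # Count how many units define each global name.
    definitions = Counter()
    for unit in units.values():
        definitions.update(unit["defines"])

    used = set().union(*(unit["uses"] for unit in units.values()))
    undefined = used - set(definitions)
    duplicates = {name for name, count in definitions.items() if count > 1}
    return undefined, duplicates


# Hypothetical compilation units of a small program.
UNITS = {
    "main.o": {"defines": {"main"}, "uses": {"parse", "log_msg"}},
    "parse.o": {"defines": {"parse", "helper"}, "uses": {"helper"}},
    "util.o": {"defines": {"helper"}, "uses": set()},
}

undefined, duplicates = link_check(UNITS)
print(undefined, duplicates)
```

Here `log_msg` is used but never defined and `helper` is defined twice, which are the two error classes that only become visible when all compilation units are seen together.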

Compiler warning flags

Compiler warning flags are one way to do static code analysis that covers all possible phases of program construction. This assumes that the compiler is involved in all phases of program construction. And it usually is, as in all phases from preprocessing to linking the compiler front-end is used as a wrapper for all the tools that do the actual hard work.

Warning flags and compilation time

Using static code analysis in the form of compiler warnings incurs some penalty, as the compiler needs to execute some extra code in addition to the normal code related to compilation. To measure the penalty and to contrast it with some more advanced static analysis tools, I did some benchmarks by compiling Cppcheck 1.73 and FFTW 3.3.4 with clang 3.8, GCC 6.1, and Infer 0.8.1 by using the `-O3` optimization level. Cppcheck is a program mainly written in C++ and FFTW is mainly written in C. Infer has some experimental checks for C++ enabled with the `--cxx` command line option, so I ran Infer twice for Cppcheck, with and without C++ checks. Clang had all warnings enabled with `-Weverything` and GCC had all warning options that did not require any special values. This resulted in the following minimum execution times of 3 runs:

| Compiler | Program | No warnings | All warnings |
| --- | --- | --- | --- |
| clang | Cppcheck | 59.3 s | 1 min 1.1 s (+ 3.0 %) |
| GCC | Cppcheck | 1 min 32.7 s | 1 min 38.8 s (+ 6.6 %) |
| Infer | Cppcheck | - | 17 min 50 s (18x slower) |
| Infer `--cxx` | Cppcheck | - | 1 h 36 min (97x slower) |
| clang | FFTW | 40.5 s | 40.9 s (+ 1 %) |
| GCC | FFTW | 42.7 s | 58.1 s (+ 36 %) |
| Infer | FFTW | - | 4 min 43 s (10x slower) |

We can see that for clang and GCC the extra processing time added even by all warning flags is pretty small compared to all the other compilation and optimization steps for a C++ application (Cppcheck). But for a mostly C based application (FFTW) GCC gets surprisingly heavy, although build times still remain within the same order of magnitude.
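
The percentages for clang and GCC follow directly from the measured times. A small sketch of that arithmetic, with the times from the measurements above converted to seconds (the function name `overhead_percent` is only for this example):

```python
def overhead_percent(baseline_s, with_warnings_s):
    """Relative slowdown of the all-warnings build, in percent."""
    return (with_warnings_s - baseline_s) / baseline_s * 100.0


# (compiler, program) -> (no warnings, all warnings), in seconds.
TIMES = {
    ("clang", "Cppcheck"): (59.3, 61.1),
    ("GCC", "Cppcheck"): (92.7, 98.8),
    ("clang", "FFTW"): (40.5, 40.9),
    ("GCC", "FFTW"): (42.7, 58.1),
}

for (compiler, program), (plain, warned) in TIMES.items():
    print(f"{compiler:5} {program:8} +{overhead_percent(plain, warned):.1f} %")
```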

If we then compare this to the time that a heavier static code analyzer takes, these compiler warnings are an extremely cheap way to add static code analysis. They may not catch all the same bugs as these more advanced methods do, but they do offer a cheap way to avoid the basic mistakes.

Warning flag lists

I have created a project that can automatically parse compiler warning flags from command line option definition files in clang and GCC. This came partially from necessity and partially from curiosity to examine what kind of options clang and GCC provide in an easy to digest format. Although both compilers provide some kind of lists of warning flags as part of their documentation, they are pretty cumbersome to go through when the main interest is to first figure out what is available and only then look at the details.

Warning options and deprecation

Different compilers have different policies about backwards compatibility and deprecation. When looking at how warning options have evolved, GCC has not removed between versions 3.4 and 6.1 a single switch, it has just switched them to do nothing (

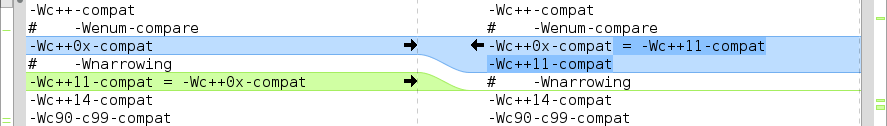

-Wimport,-Wunreachable-code, and-Wmudflapswitches). Clang on the other hand has removed multiple switches between versions and for example there is no references to-Wcxx98-cxx11-compatin the current codebase even if clang 3.3 had such switch.Examining differences visually

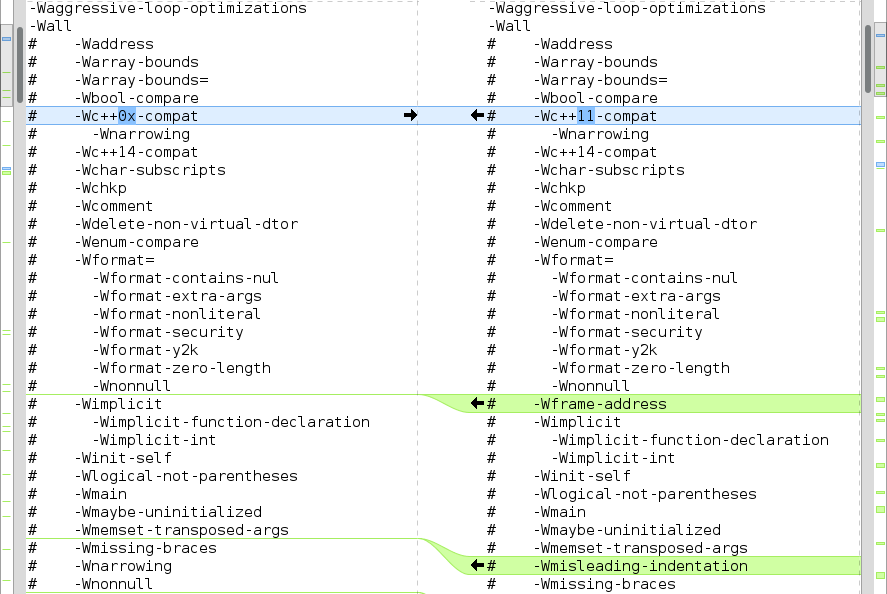

Generating large purely textual differences between different files becomes cumbersome quite soon if you want to do anything more complicated than a simple difference of unique command line options between two subsequent versions. For example, figure 1 shows what other warnings the `-Wall` flag enables in GCC 6 when compared to GCC 5. We can see that quite many extra warnings have been added to the `-Wall` switch, so newer compiler versions provide extra analysis capabilities even without adding all the new options individually.
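
The simple case, a difference of unique command line options between two versions, is just a set difference. A minimal sketch follows. The flag names in it are made-up placeholders and not the real contents of `-Wall` in any GCC version.

```python
def diff_flags(old_flags, new_flags):
    """Return (added, removed) flags between two compiler versions."""
    old, new = set(old_flags), set(new_flags)
    return sorted(new - old), sorted(old - new)


# Placeholder flag lists; real lists would come from the parsed option files.
OLD_VERSION = ["-Wplaceholder-a", "-Wplaceholder-b", "-Wplaceholder-c"]
NEW_VERSION = ["-Wplaceholder-a", "-Wplaceholder-c", "-Wplaceholder-d"]

added, removed = diff_flags(OLD_VERSION, NEW_VERSION)
print("added:", added)
print("removed:", removed)
```

This flat comparison loses the information about which switch enables which other switches, and that hierarchy is the part that a visual diff tool shows more readily.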

Figure 1: Meld showing the differences in what flags `-Wall` enables between GCC 5 and 6.

From figure 2 we can also see that GCC 6 uses `-Wc++11-compat` as the default warning flag for indicating differences between ISO C++ 1998 and ISO C++ 2011 for constructs that have the same name, instead of `-Wc++0x-compat`, which refers to a draft standard. So GCC has basically deprecated the `-Wc++0x-compat` switch in favor of a switch that refers to the actual standard.

Suggestions for usable warning options

I won’t be giving any specific suggestions here for warning flags, as there seem to be new options for each subsequent compiler release. A good place to start is NASA’s JPL Institutional Coding Standard for the C Programming Language that includes a very short list of rudimentary warning flags for GCC. It also includes a short list of coding standards of which each one would have prevented a mission failure for NASA. SEI CERT coding standards for secure coding also provide various automatically generated lists for clang warning flags and GCC warning flags based on the issues that these standards take into account.

And finally, check out the warning flag lists for clang and GCC and make your own combinations that bring the most benefit for whatever you are working with. Not all of them are appropriate for your project and some of them may even work against the useful development patterns that you have.

Cautionary tales about compiler warning flags

Even though it might sound like a good idea to rush and fix all the issues that these new compiler warning flags uncover, it might actually cause some new bugs to pop up. Specifically the SQLite database engine has had its own take on compiler warnings and their fixing, and they have concluded that fixing compiler warnings has actually produced some extra bugs that would not have appeared if there had been no attempts to fix compiler warnings.

I have also had my own take on compiler warning fixes and sometimes I have screwed up and broken perfectly working code while fixing a misleading warning. But generally, in my own experience, fixing warnings has fixed more bugs than it has introduced. And the coolest thing is that having these warnings enabled as part of the standard development process prevents some bugs from ever creeping into the application in the first place.
