Articles

-

Being a good CPU neighbor

Very often computational tasks are roughly divided into real-time and batch processing tasks. Sometimes you might want to run some tasks that take a large amount of computation resources, get the result as fast as possible, but you don’t really care exactly when you get the result out.

In larger organizations there are shared computers that usually have a lot of CPU power and memory available and that are mostly idle. But they are also used by other users who will be quite annoyed if they suffer from long delays to key presses or from similar issues because your batch processing tasks take all the available resources away from the system. Fortunately, *nix systems provide different mechanisms available to non-root users to avoid this kind of issue.

These issues can also arise in situations where you have a system that can only allocate static resource requirements to tasks, like in Jenkins. As an example, you might have some test jobs that need to finish within a certain time limit mixed with compilation jobs that should finish as soon as possible, but that can yield some resources to these higher priority test jobs, as they don’t have as strict time limits. And you usually don’t want to limit the resources that compilation jobs can use if they are run on an otherwise idle machine.

Here I’m specifically focusing on process priority settings and other CPU scheduling settings provided by Linux, as it currently happens to be probably the most used operating system kernel for multi-user systems. These settings affect how much CPU time a process gets relative to other processes and are especially useful on shared overloaded systems where there are more processes running than there are CPU cores available.

Different process scheduling priorities in Linux

Linux and POSIX interfaces provide different scheduling policies that define how the Linux scheduler allocates CPU time to a process. There are two main groups of scheduling policies for processes: real-time priority policies and normal scheduling policies. Real-time policies are usually accessible only to the root user and are not the point of interest here, as they cannot usually be used by normal users on shared systems.

At the time of writing this article there are three normal scheduling policies available to normal users for process priorities:

- `SCHED_OTHER`: the default scheduling policy in Linux with the default dynamic priority of 0.
- `SCHED_BATCH`: policy for scheduling batch processes. This schedules processes in a similar fashion to `SCHED_OTHER` and is affected by the dynamic priority of a process. This makes the scheduler assume that the process is CPU intensive and makes it harder to wake up from a sleep.
- `SCHED_IDLE`: the lowest priority scheduling policy. Not affected by dynamic priorities. This equals a nice level priority of 20.

The standard *nix way to change process priorities is to use the `nice` command. By default in Linux, a process can get nice values of -20–19, where the default nice value for a process is 0. When a process is started with the `nice` command without an explicit adjustment, the nice value will be 10. Values 0–19 are available to a normal user and -20–-1 are available to the root user. The lowest priority nice value of 19 gives a process 5% of CPU time with the default scheduler in Linux.
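To get a feeling for how nice values translate into CPU time, here is a minimal sketch in C. It assumes the commonly documented approximation that each nice level step changes the scheduler weight by a factor of about 1.25, and that two always-runnable processes compete for a single core. The function names `approx_weight` and `approx_cpu_share` are made up for this illustration, and the real kernel uses a precomputed weight table, so treat the numbers as rough estimates only.

```c
#include <assert.h>

/* Approximate relative scheduler weight of a nice level, assuming that every
 * step towards lower priority divides the weight by about 1.25. Nice level 0
 * gets the weight 1.0. This is an approximation, not the kernel's table. */
double approx_weight(int nice_level)
{
    assert(nice_level >= -20 && nice_level <= 19);

    double weight = 1.0;
    for (int i = 0; i < nice_level; i++)
        weight /= 1.25;     /* positive nice values: lower weight */
    for (int i = 0; i > nice_level; i--)
        weight *= 1.25;     /* negative nice values: higher weight */
    return weight;
}

/* Estimated share (0.0 to 1.0) of one core that process A gets when
 * processes A and B are both runnable all the time on that core. */
double approx_cpu_share(int nice_a, int nice_b)
{
    double a = approx_weight(nice_a);
    double b = approx_weight(nice_b);
    return a / (a + b);
}
```

With this model two processes at the same nice level split the core evenly, and a difference of one nice level shifts the split to roughly 56% versus 44%.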

The `chrt` command can be used to give a process access to the `SCHED_BATCH` and `SCHED_IDLE` scheduling policies. `chrt` can be used in combination with the `nice` command to lower the priority of the `SCHED_BATCH` scheduling policy. And using the `SCHED_IDLE` (= nice level 20) policy should give around 80% of the CPU time that a nice level of 19 has, as nice level weights increase the process priority by a factor of 1.25 compared to the next lower priority level.

Benchmarking with different competing workloads

I wanted to benchmark the effect of different scheduling policies on the work done by a specific benchmark program. I used a system with a Linux 3.17.0 kernel and an Intel Core i7-3770K CPU at 3.5 GHz with hyperthreading and frequency scaling turned on and 32 gigabytes of RAM. I didn’t manage to make the CPU frequency constant, so results vary a little between runs. I used John the Ripper’s bcrypt benchmark, started with the following command, as the test program for useful work:

```
$ /usr/sbin/john --format=bcrypt -test
```

I benchmarked 1 and 7 instances of John the Ripper for 9 iterations (9 * 5 = 45 seconds) with various amounts of non-benchmarking processes running at the same time. With 1 benchmarking process there should by default be no cache contention caused by other benchmarking processes, whereas 7 benchmarking processes saturate all but 1 logical CPU core and can have cache and computational unit contention with each other.

Non-benchmarking processes were divided into different categories, and 0–7 times the number of CPU cores instances of them were started to make them fight for CPU time with the benchmarking processes. The results of running different amounts of non-benchmarking processes with different scheduling policies can be found in tables 1, 2, 3, and 4. The different scheduling policies visible in those tables are:

- default: default scheduling policy. Command started without any wrappers. Corresponds to `SCHED_OTHER` with nice value of 0.
- chrt-batch: the default `SCHED_BATCH` scheduling policy. Command started with `chrt --batch 0` prefix.
- nice 10: `SCHED_OTHER` scheduling policy with the default nice value of 10. Command started with `nice` prefix.
- nice 19: `SCHED_OTHER` scheduling policy with nice value of 19. Command started with `nice -n 19` prefix. This should theoretically take around 5 % of CPU time out from the useful work.
- nice 19 batch: `SCHED_BATCH` scheduling policy with nice value of 19. Command started with `nice -n 19 chrt --batch 0` prefix.
- sched idle: `SCHED_IDLE` scheduling policy. Command started with `chrt --idle 0` prefix. This should theoretically take out from useful work around 80 % of the CPU time that nice level 19 takes.
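The wrappers listed above can also be applied from inside a program. The following is a minimal sketch, assuming Linux with glibc, of how a process could move itself to one of these policies with `setpriority()` and `sched_setscheduler()`. The helper names `policy_name` and `become_background` are made up for this illustration and are not part of the benchmark. The fallback `#define` values are the Linux kernel's numbers for these policies, in case the headers do not expose them.

```c
#ifndef _GNU_SOURCE
#define _GNU_SOURCE         /* Exposes SCHED_BATCH and SCHED_IDLE in glibc. */
#endif
#include <assert.h>
#include <sched.h>
#include <stdio.h>
#include <sys/resource.h>

/* Fallbacks for headers that hide the Linux-specific policies. */
#ifndef SCHED_BATCH
#define SCHED_BATCH 3
#endif
#ifndef SCHED_IDLE
#define SCHED_IDLE 5
#endif

/* Human-readable name for the policies discussed in this article. */
const char *policy_name(int policy)
{
    switch (policy) {
    case SCHED_OTHER: return "SCHED_OTHER";
    case SCHED_BATCH: return "SCHED_BATCH";
    case SCHED_IDLE:  return "SCHED_IDLE";
    default:          return "unknown";
    }
}

/* Sets the nice value and the scheduling policy of the calling process.
 * Returns 0 on success and -1 on failure (errno is set by the failing call).
 * Note that a normal user can usually not lower the nice value again. */
int become_background(int policy, int niceness)
{
    assert(niceness >= -20 && niceness <= 19);

    /* The normal policies all use the static priority 0. */
    struct sched_param param = { .sched_priority = 0 };

    if (setpriority(PRIO_PROCESS, 0, niceness) == -1) {
        perror("setpriority");
        return -1;
    }
    if (sched_setscheduler(0, policy, &param) == -1) {
        fprintf(stderr, "cannot switch to %s\n", policy_name(policy));
        return -1;
    }
    return 0;
}
```

Calling `become_background(SCHED_BATCH, 19)` before starting the heavy work should roughly correspond to the `nice -n 19 chrt --batch 0` prefix, and `become_background(SCHED_IDLE, 0)` to `chrt --idle 0`.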

The results in those tables reflect the relative average percentage of work done compared to the situation where there are no additional processes disturbing the benchmark. These only show the average value and do not show, for example, the variance that could be useful to determine how close those values are to each other. You can download the raw benchmarking data and the source code to generate and analyze this data from the following links:

CPU looper

The CPU looper consists purely of an application that causes CPU load. Its main purpose is just to test the scheduling policies without forcing CPU cache misses. Of course there will be context switches that can replace data cache entries related to process state and instruction cache entries for the process with another, but there won’t be any extra intentional cache flushing happening here.

The actual CPU looper application consists of the code shown in figure 1, compiled without any optimizations:

```c
int main() {
    while (1) {}
    return 0;
}
```

Figure 1: source code for CPU looper program.

The results for starting 0–7 times the number of CPU cores of CPU looper processes with the default priority and then running the benchmark with 1 or 7 cores can be found in tables 1 and 2.

| 1 worker | 0 | 1 | 2 | 3 | 4 | 5 | 6 | 7 |
|---|---|---|---|---|---|---|---|---|
| default | 100 | 64.6 | 36.4 | 25.5 | 18.2 | 15.4 | 12.5 | 11.0 |
| nice 0 vs. sched batch | 100 | 66.5 | 37.0 | 24.0 | 16.3 | 14.8 | 12.2 | 10.9 |
| nice 0 vs. nice 10 | 100 | 93.3 | 90.2 | 82.7 | 75.4 | 75.2 | 75.0 | 74.9 |
| nice 0 vs. nice 19 | 100 | 92.9 | 90.4 | 91.0 | 91.8 | 89.8 | 89.4 | 90.9 |
| nice 0 vs. nice 19 batch | 100 | 94.0 | 89.2 | 91.4 | 89.8 | 86.4 | 89.4 | 90.7 |
| nice 0 vs. sched idle | 100 | 95.0 | 91.3 | 92.2 | 92.0 | 92.6 | 91.0 | 92.4 |
| | | | | | | | | |
| nice 10 vs. nice 19 | 100 | 80.0 | 74.7 | 68.0 | 67.7 | 67.5 | 64.5 | 60.7 |
| nice 10 vs. nice 19 batch | 100 | 92.5 | 83.8 | 75.9 | 75.0 | 74.3 | 74.1 | 69.8 |
| nice 10 vs. sched idle | 100 | 89.8 | 89.7 | 90.9 | 89.0 | 87.8 | 87.4 | 87.2 |
| | | | | | | | | |
| nice 19 vs. sched idle | 100 | 77.9 | 69.7 | 66.9 | 66.3 | 64.6 | 63.4 | 58.1 |

Table 1: the relative percentage of average work done for one benchmarking worker when there are 0–7 times the logical CPU cores of non-benchmarking CPU hogging jobs running with different scheduling policies.

| 7 workers | 0 | 1 | 2 | 3 | 4 | 5 | 6 | 7 |
|---|---|---|---|---|---|---|---|---|
| default | 100 | 49.8 | 33.6 | 24.6 | 19.4 | 16.4 | 13.8 | 12.2 |
| nice 0 vs. sched batch | 100 | 51.1 | 34.0 | 25.1 | 20.0 | 16.6 | 14.2 | 12.4 |
| nice 0 vs. nice 10 | 100 | 92.9 | 87.2 | 80.1 | 75.0 | 71.0 | 66.3 | 61.1 |
| nice 0 vs. nice 19 | 100 | 94.1 | 94.9 | 95.4 | 95.2 | 96.2 | 95.7 | 96.0 |
| nice 0 vs. nice 19 batch | 100 | 95.7 | 95.2 | 95.7 | 95.5 | 95.2 | 94.6 | 95.4 |
| nice 0 vs. sched idle | 100 | 96.3 | 95.7 | 96.2 | 95.6 | 96.7 | 95.4 | 96.7 |
| | | | | | | | | |
| nice 10 vs. nice 19 | 100 | 93.6 | 84.9 | 78.0 | 71.4 | 65.7 | 60.4 | 56.2 |
| nice 10 vs. nice 19 batch | 100 | 92.4 | 84.1 | 77.6 | 69.9 | 65.6 | 60.7 | 56.4 |
| nice 10 vs. sched idle | 100 | 95.5 | 94.9 | 94.5 | 93.9 | 94.3 | 93.7 | 91.9 |
| | | | | | | | | |
| nice 19 vs. sched idle | 100 | 91.4 | 80.9 | 71.4 | 64.3 | 58.3 | 52.5 | 48.5 |

Table 2: the relative percentage of average work done for seven benchmarking workers when there are 0–7 times the logical CPU cores of non-benchmarking CPU hogging jobs running with different scheduling policies.

Tables 1 and 2 show that the effect is not consistent between different scheduling policies, and when there is 1 benchmarking worker running it suffers more from the lower priority processes than what happens when there are 7 benchmarking workers running. But with higher priority scheduling policies for background processes the average amount of work done for 1 process remains higher for light loads than with 7 worker processes. These discrepancies can probably be explained by how hyperthreading works by sharing the same physical CPU cores and by caching issues.

Memory looper

Processors nowadays have multiple levels of cache and no cache isolation, so memory access from one core can wreak havoc for other cores just by moving data to and from memory. So I wanted to see what happens with different scheduling policies when running multiple instances of a simple program that runs in the background while John the Ripper is trying to do some real work.

```c
#include <stdlib.h>

int main() {
    // 2 * 20 megabytes should be enough to spill all caches.
    const size_t data_size = 20000000;
    const size_t items = data_size / sizeof(long);
    long* area_source = calloc(items, sizeof(long));
    long* area_target = calloc(items, sizeof(long));
    while (1) {
        // Just do some memory operations that access the whole memory area.
        for (size_t i = 0; i < items; i++) {
            area_source[i] = area_target[i] + 1;
        }
        for (size_t i = 0; i < items; i++) {
            area_target[i] ^= area_source[i];
        }
    }
    return 0;
}
```

Figure 2: source code for memory bandwidth hogging program.

The program shown in figure 2 is compiled without any optimizations. It basically reads one word from memory, adds 1 to it, and stores the result to some other memory area, and then XORs the read memory area with the written memory area. So it basically does not do anything useful, but reads and writes a lot of data into memory every time the program gets some execution time.

Tables 3 and 4 show the relative effect on the John the Ripper benchmarking program when there are various amounts of the program shown in figure 2 running at the same time. If you compare these numbers to the values shown in tables 1 and 2, where a program that only uses CPU cycles is running, the numbers for useful work can be in some cases around 10 percentage points lower. So there is apparently some cache contention ongoing with this benchmarking program, and the effect of lower priority scheduling policies is not the same as what could be theoretically expected just from the allocated time slices.

| 1 worker | 0 | 1 | 2 | 3 | 4 | 5 | 6 | 7 |
|---|---|---|---|---|---|---|---|---|
| default | 100 | 61.7 | 32.6 | 21.8 | 16.6 | 13.3 | 11.2 | 9.8 |
| nice 0 vs. sched batch | 100 | 60.2 | 32.6 | 22.7 | 16.2 | 12.9 | 10.9 | 10.2 |
| nice 0 vs. nice 10 | 100 | 85.2 | 82.1 | 75.8 | 70.8 | 69.6 | 70.5 | 68.1 |
| nice 0 vs. nice 19 | 100 | 85.4 | 83.0 | 79.7 | 81.7 | 78.2 | 81.9 | 84.7 |
| nice 0 vs. nice 19 batch | 100 | 83.8 | 81.2 | 80.5 | 83.4 | 80.4 | 79.4 | 84.3 |
| nice 0 vs. sched idle | 100 | 82.9 | 80.9 | 81.2 | 82.1 | 80.6 | 82.3 | 81.8 |
| | | | | | | | | |
| nice 10 vs. nice 19 | 100 | 80.0 | 74.7 | 68.0 | 67.7 | 67.5 | 64.5 | 60.7 |
| nice 10 vs. nice 19 batch | 100 | 80.8 | 74.1 | 67.6 | 67.1 | 67.9 | 65.5 | 63.3 |
| nice 10 vs. sched idle | 100 | 83.9 | 81.6 | 79.2 | 77.0 | 75.9 | 76.8 | 77.1 |
| | | | | | | | | |
| nice 19 vs. sched idle | 100 | 77.9 | 69.7 | 66.9 | 66.3 | 64.6 | 63.4 | 58.1 |

Table 3: the relative percentage of average work done for one benchmarking worker when there are 0–7 times the logical CPU cores of non-benchmarking CPU and memory bandwidth hogging jobs running with different scheduling policies.

| 7 workers | 0 | 1 | 2 | 3 | 4 | 5 | 6 | 7 |
|---|---|---|---|---|---|---|---|---|
| default | 100 | 48.4 | 32.6 | 24.5 | 19.7 | 16.3 | 14.2 | 12.3 |
| nice 0 vs. sched batch | 100 | 48.6 | 32.2 | 23.7 | 19.4 | 16.2 | 14.0 | 12.6 |
| nice 0 vs. nice 10 | 100 | 89.3 | 81.5 | 74.6 | 69.2 | 64.6 | 60.4 | 56.3 |
| nice 0 vs. nice 19 | 100 | 92.0 | 90.5 | 90.4 | 91.2 | 91.3 | 90.6 | 90.5 |
| nice 0 vs. nice 19 batch | 100 | 91.5 | 91.8 | 92.5 | 91.8 | 91.9 | 92.1 | 91.9 |
| nice 0 vs. sched idle | 100 | 92.1 | 91.9 | 91.9 | 91.5 | 92.0 | 92.4 | 92.1 |
| | | | | | | | | |
| nice 10 vs. nice 19 | 100 | 85.4 | 77.3 | 70.4 | 63.3 | 58.3 | 54.2 | 50.3 |
| nice 10 vs. nice 19 batch | 100 | 86.8 | 77.7 | 70.0 | 63.4 | 58.8 | 54.4 | 50.6 |
| nice 10 vs. sched idle | 100 | 90.6 | 89.0 | 88.9 | 88.9 | 88.0 | 87.4 | 86.3 |
| | | | | | | | | |
| nice 19 vs. sched idle | 100 | 82.8 | 72.9 | 62.9 | 57.3 | 52.2 | 48.2 | 44.1 |

Table 4: the relative percentage of average work done for seven benchmarking workers when there are 0–7 times the logical CPU cores of non-benchmarking CPU and memory bandwidth hogging jobs running with different scheduling policies.

Conclusions

These are just the results of one specific benchmark with two specific workloads on one specific machine with 4 hyperthreaded CPU cores. They should anyway give you some kind of an idea of how different CPU scheduling policies under Linux affect the load that you do not want to disturb when your machine is more or less overloaded. Clearly, when you are reading and writing data from and to memory, the lower priority background process has a bigger impact on the actual worker process than what the allocated time slices would predict. But, unsurprisingly, a single user can have quite a large impact on how much their long running CPU hogging processes affect the rest of the machine.

I did not do any investigation of how much work those processes that are supposed to disturb the benchmarking get done. In this case `SCHED_BATCH` with a nice value of 19 could probably be the best scheduling policy if we want to get the most work done and at the same time avoid disturbing other users. Otherwise, it looks like the `SCHED_IDLE` policy, taken into use with the `chrt --idle 0` command, which is promised to have the lowest impact on other processes, really does have the lowest impact, especially when considering processes started with lower nice values than the default one.

-

C, for(), and signed integer overflow

Signed integer overflow is undefined behavior in the C language. This enables compilers to do all kinds of optimization tricks that can lead to surprising and unexpected behavior between different compilers, compiler versions, and optimization levels.

I encountered something interesting when I tried different versions of GCC and clang with the following piece of code:

```c
#include <limits.h>
#include <stdio.h>

int main(void)
{
  int iterations = 0;
  for (int i = INT_MAX - 2; i > 0; i++) {
    iterations++;
  }
  printf("%d\n", iterations);
  return 0;
}
```

When compiling this with GCC 4.7, GCC 4.9, and clang 3.4 and compilation options for x86_64 architecture, we get the following output variations:

```
$ gcc-4.9 --std=c11 -O0 tst.c && ./a.out
3
$ gcc-4.9 --std=c11 -O3 tst.c && ./a.out
2
$ clang-3.4 --std=c11 -O3 tst.c && ./a.out
4
$ gcc-4.7 --std=c11 -O3 tst.c && ./a.out
asdf
<infinite loop...>
```

So let’s analyze these results. The loop `for (int i = INT_MAX - 2; i > 0; i++) {` should only iterate `3` times until variable `i` wraps around to a negative value due to two’s complement arithmetic. Therefore we would expect the `iterations` variable to be `3`. But this only happens when optimizations are turned off. So let’s see why these outputs result in these different values. Is there some clever loop unrolling that completely breaks here or what?

GCC 4.9 -O0

There is nothing really interesting going on in the assembly code produced by GCC without optimizations. It looks like what you would expect from this kind of `for()` loop on the lower level. So the main interest is in the optimizations that different compilers do.

GCC 4.9 -O3 and clang 3.4 -O3

GCC 4.9 and clang 3.4 both seem to result in similar machine code. They both optimize the `for()` loop out and replace the result of it with a (different) constant. GCC 4.9 is just creating code that assigns value `2` to the second parameter for the `printf()` function (see System V Application Binary Interface AMD64 Architecture Processor Supplement section 3.2.3 about parameter passing). Similarly clang 3.4 assigns value `4` to the second parameter for `printf()`.

GCC 4.9 -O3 assembler code for main()

Here we can see how value `2` gets passed as the `iterations` variable:

```
Dump of assembler code for function main:
5	{
   0x00000000004003f0 <+0>:	sub    $0x8,%rsp
6	  int iterations = 0;
7	  for (int i = INT_MAX - 2; i > 0; i++) {
8	    iterations++;
9	  }
10	  printf("%d\n", iterations);
   0x00000000004003f4 <+4>:	mov    $0x2,%esi
   0x00000000004003f9 <+9>:	mov    $0x400594,%edi
   0x00000000004003fe <+14>:	xor    %eax,%eax
   0x0000000000400400 <+16>:	callq  0x4003c0 <printf@plt>
11	  return 0;
12	}
   0x0000000000400405 <+21>:	xor    %eax,%eax
   0x0000000000400407 <+23>:	add    $0x8,%rsp
   0x000000000040040b <+27>:	retq
```

clang 3.4 -O3 assembler code for main()

Here we can see how value `4` gets passed as the `iterations` variable:

```
Dump of assembler code for function main:
5	{
6	  int iterations = 0;
7	  for (int i = INT_MAX - 2; i > 0; i++) {
8	    iterations++;
9	  }
10	  printf("%d\n", iterations);
   0x00000000004004e0 <+0>:	push   %rax
   0x00000000004004e1 <+1>:	mov    $0x400584,%edi
   0x00000000004004e6 <+6>:	mov    $0x4,%esi
   0x00000000004004eb <+11>:	xor    %eax,%eax
   0x00000000004004ed <+13>:	callq  0x4003b0 <printf@plt>
   0x00000000004004f2 <+18>:	xor    %eax,%eax
11	  return 0;
   0x00000000004004f4 <+20>:	pop    %rdx
   0x00000000004004f5 <+21>:	retq
```

GCC 4.7 -O3

GCC 4.7 has an interesting result from the optimizer that is completely different from what could be expected to happen. It basically replaces the `main()` function with just one instruction that does nothing other than jump to the same address that it resides in and thus creates an infinite loop:

```
Dump of assembler code for function main:
5	{
   0x0000000000400400 <+0>:	jmp    0x400400 <main>
```

Conclusion

This is an excellent example of nasal demons happening when there is undefined behavior ongoing and compilers have free hands to do anything they want. This is especially true for GCC 4.7, which produces really harmful machine code: instead of producing an unexpected value as a result, it just makes the program unusable. Other compilers and other architectures could result in different behavior, but let that be something for future investigation.
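For comparison, here is a minimal sketch of two ways to write a loop like this without ever evaluating `INT_MAX + 1`, so that the result no longer depends on undefined behavior. The function names are made up for this illustration. The second variant relies on `__builtin_add_overflow`, which is a compiler extension available in newer GCC and clang versions and not part of standard C, so it is an assumption about the toolchain.

```c
#include <assert.h>
#include <limits.h>

/* Counts the loop iterations from `start` up to and including INT_MAX by
 * checking for the maximum value before incrementing. */
int count_until_int_max(int start)
{
    int iterations = 0;
    for (int i = start; i > 0; ) {
        iterations++;
        if (i == INT_MAX)
            break;          /* Incrementing here would overflow: stop. */
        i++;
    }
    /* For a positive start the loop visits start..INT_MAX inclusive. */
    assert(start <= 0 || iterations == INT_MAX - start + 1);
    return iterations;
}

/* Same loop, but lets the compiler builtin detect the overflow. The builtin
 * returns true when the mathematically correct sum does not fit into `i`. */
int count_with_checked_add(int start)
{
    int iterations = 0;
    int i = start;
    while (i > 0) {
        iterations++;
        if (__builtin_add_overflow(i, 1, &i))
            break;
    }
    return iterations;
}
```

Both functions return `3` when called with `INT_MAX - 2`, regardless of the optimization level, because the overflow is never left for the compiler to reason about.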

-

Shell script patterns for bash

I write a lot of shell scripts using bash to glue together existing programs and their data. I have noticed that some patterns have emerged that I very often use when writing these scripts nowadays. Let the following script demonstrate:

```bash
#!/bin/bash

set -u # Exit if undefined variable is used.
set -e # Exit after first command failure.
set -o pipefail # Exit if any part of the pipe fails.

ITERATIONS=$1 # Notice that there are no quotes here.
shift
COMMAND=("$@") # Create arrays from commands.

DIR=$(dirname "$(readlink -f "$0")") # Get the script directory.
echo "Running '${COMMAND[*]}' in $DIR for $ITERATIONS iterations"

WORKDIR=$(mktemp --directory)
function cleanup()
{
    rm -rf "$WORKDIR"
}
trap cleanup EXIT # Make sure that we clean up in any situation.

for _ in $(seq 1 "$ITERATIONS"); do
    ( # Avoid the effect of "cd" command from propagating.
        cd "$WORKDIR"
        "${COMMAND[@]}" \
            | cat # The command above is executed from an array.
    )
done
```

Fail fast in case of errors

Lines 3–5 are related to handling erroneous situations. If any of the situations that these settings affect happens, they will exit the shell script and prevent it from running further. They can be combined into `set -ueo pipefail` as a shorthand notation.

A very common mistake is to have an undefined variable, by just forgetting to set the value, or mistyping a variable name. `set -u` at line 3 prevents this kind of issue from happening. Another very common situation in shell scripts is that some program is used incorrectly, it fails, and then the rest of the shell script just continues execution. This then causes hard to notice failures later on. `set -e` at line 4 prevents these issues from happening. And because life is not easy in bash, we need to ensure that such program execution failures that are part of pipelines are also caught. This is done by `set -o pipefail` on line 5.

Clean up after yourself even in unexpected situations

It’s always a good idea to use unique temporary files or directories for anything that can be created during runtime that is not a specific target of the script. This makes sure that even if many instances of the script are executed in parallel, there won’t be any race condition issues. The `mktemp` command on line 14 shows how to create such temporary files.

Creating temporary files and directories poses a problem where they may not be deleted if the script is exited too early or if you forget to do a manual cleanup. The `cleanup()` function shown at lines 15–18 includes code that makes sure that the script cleans up after itself, and `trap cleanup EXIT` makes sure that `cleanup()` is implicitly called in any situation where this script may exit.

Executing commands

Something that is often seen in shell scripts is that when you need to create a variable that has a command with arguments, you then execute the variable as it is (`$COMMAND`) without using any safety mechanisms against input that can lead to unexpected results in the shell script. Lines 9 `COMMAND=("$@")` and 24 `"${COMMAND[@]}"` show a way to assign a command into an array and run such an array as a command and its parameters. Line 25 then includes a pipe just to demonstrate that `set -o pipefail` works if you pass a command that fails.

Script directory reference

Sometimes it’s very useful to access other files in the same directory where the executed shell script resides. Line 11 `DIR=$(dirname "$(readlink -f "$0")")` basically gives the absolute path to the current shell script directory. The order of the `readlink` and `dirname` commands can be changed depending on whether you want to access the directory where the command to execute this script points to or where the script actually resides. These can be different locations if there is a symbolic link to the script. The order shown above points to the directory where the script is physically located.

Quotes are not always needed

Normally in bash, if you reference variables without enclosing them in quotes, you risk shell injection attacks. But when assigning variables in bash, you don’t need to have quotes in the assignment as long as you don’t use spaces. The same applies to command substitution (some caveats with variable declarations apply). This style is demonstrated on lines 7 `ITERATIONS=$1` and 11 `DIR=$(...)`.

Shellcheck your scripts

One cool program that will make you a better shell script writer is ShellCheck. It basically has a list of issues in shell scripts that it detects and can notify you whenever it notices issues that should be fixed. If you are writing shell scripts as part of some version controlled project, you should add an automatic ShellCheck verification step for all shell scripts that you put into your repository.

-

A successful Git branching model considered harmful

Update 2018-07. The branching model described here is called trunk based development. I and other people who I collaborated with did not know about the articles that used this name. Nowadays there are excellent web resources about the subject, like trunkbaseddevelopment.com. They have a lot of material about this and other key subjects revolving around the area of efficient software development.

When people start to use git and get introduced to branches and to the ease of branching, they may do a couple of Google searches and very often end up on a blog post about A successful Git branching model. The biggest issue with this article is that it comes up as one of the first ones in many git branching related searches when it should serve as a warning of how not to use branches in software development.

What is wrong with “A successful Git branching model”?

To put it bluntly, this type of development approach where you use shared remote branches for everything and merge them back as they are is much more complicated than it should be. The basic principle in making usable systems is to have sane defaults. This branching model makes that mistake from the very beginning by not using the `master` branch for what a developer who clones the repository would expect it to be used for: development.

Using individual (long lived) branches for features also makes it harder to ensure that everything works together when changes are merged back together. This is especially pronounced in today’s world where continuous integration should be the default practice of software development regardless of how big the project is. By integrating all changes together regularly you’ll avoid big integration issues that waste a lot of time to resolve, especially for bigger projects with hundreds or thousands of developers. This type of development practice where every feature is developed in its own shared remote branch drives the process naturally towards big integration issues instead of avoiding them.

Also in “A successful Git branching model” merge commits are encouraged as the main method for integrating changes. I will explain next why merge commits are bad and what you will lose by using them.

What is wrong with merge commits?

“A successful Git branching model” talks about how non-fast-forward merge commits can be thought of as a way to keep all commits related to a certain feature nicely in one group. Then if you decide that a feature is not for you, you can just revert that one commit and have the whole feature removed. I would argue that it is a really rare situation that you revert a feature or that you even get it done completely right on the first try.

Merges in git very often create additional commits that begin with a message that looks like the following: “`Merge branch 'some-branch' of git://git.some.domain/repository/`”. That does not provide any value when you want to see what has actually changed. You need to go to the commit message and read what happens there, probably in the second paragraph. Not to mention going back in history to the branch and trying to see what happens in that branch.

Having non-linear history also makes git bisect harder to do when issues are only revealed during integration. You may have both of the branches good individually, but then the merge commit fails because the changes are incompatible, even though they do not conflict textually. This is not even that hard to encounter when one developer changes some internal interface and another developer builds something new based on the old interface definition. These kinds of issues can be easy or hard to figure out, but having the history linear without any merge commits could immediately point out the commit that causes issues.

Something more simple

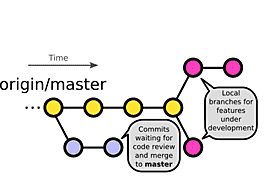

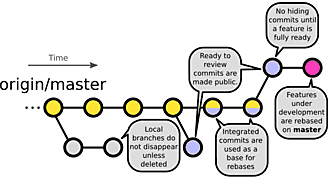

Figure 1: The cactus model

Let me show a much simpler alternative that we can call the cactus model. It gets the name from the fact that all branches branch out from a wide trunk (`master` branch) and never get merged back. The cactus model should reflect much better the way of working that comes up naturally when working with git and making sure that continuous integration principles are used.

In figure 1 you can see the principle of how the cactus branching model works, and the following sections explain the reasoning behind it. Some principles shown here may need Gerrit or a similar integrated code review and repository management system to be fully usable.

All development happens on the master branch

The `master` branch is the default that is checked out after `git clone`. So why not also have all development happen there? No need to guess or needlessly document the development branch when it is the default one. This only applies to the central repository that is cloned and kept up to date by everyone. Individual developers are encouraged to use local branches for development but avoid shared remote branches.

Developers should `git rebase` their changes regularly so that their local branches follow the latest `origin/master`. This is to make sure that we do not develop on an outdated baseline.

Using local branches

The cactus model does not discourage using branches when they are useful. Especially an individual developer should use short lived feature branches in their local repository and integrate them with `origin/master` whenever there is something that can be shared with everyone else. Local branches are just to make it easier to move between features while commits are tested or under code review.

Figures 2 and 3 show the basic principle of local branches and rebases by visualizing a tree state. In figure 2 we have a situation with two active local development branches (fuchsia circles) and one branch that is under code review (blue circles) and ready to be integrated to `origin/master` (yellow circles). In figure 3 we have updated `origin/master` with two new commits (yellow-blue circles) and submitted two commits for code review (blue circle) and consider them to be ready for integration. As branches don’t automatically disappear from the repository, the integrated commits are still in the local repository (gray circles), but hopefully forgotten and ready to be garbage collected.

Shared remote branches

As a main principle, shared remote branches should be avoided. All changes should be made available on

origin/masterand other developers should build their changes on top of that by continuously updating their working copies. This ensures that we do not end up in integration hell that will happen when many feature branches need to be combined at once.If you use staged code review system, like Gerrit or github, then you can just

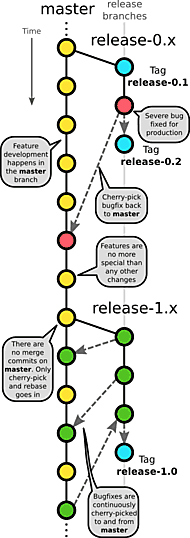

git fetchthe commit chain and build on top of that. Thengit pushyour changes to your own repository to some specific branch, that you have hopefully rebased on top of theorigin/masterbefore pushing.Releases are branched out from origin/master

Releases get their own tags or branches that are branched out from `origin/master`. In case we need a hotfix, just add that to the release branch and cherry-pick it to the master branch, if applicable. Using some specific tagging and branch naming scheme should enable automatic releases, but this should be completely invisible to developers in their daily work.

There are only fast-forward merges

`git merge` is not used. Changes go to `origin/master` by using `git rebase` or `git cherry-pick`. This avoids cluttering the repository with merge commits that do not really provide any real value and avoids the christmas tree look on the repository. Rebasing also makes the history linear, so that `git bisect` is really easy to use for finding regressions.

If you are using Gerrit, you can also use the cherry-pick submit strategy. This simply enables putting a collection of commits to `origin/master` in any desired order instead of having to settle for the order decided when the commits were first put up for code review.

Concluding remarks

Git is really cool as a version control system. You can really easily do all kinds of nifty stuff with it that was hard or impossible to do before. Branches are just pointers to certain commits and that way you can create a branch really cheaply from anything. Also you can do all kinds of fancy merges and this makes using and mixing branches very easy. But as with all tools, branches should be used appropriately to make it easier for developers to do their daily development tasks, not harder by default.

I have also seen these kinds of scary development practices being used in projects with hundreds of developers when moving to git from some other version control system. Some organizations even take this so far that they fully remove the `master` branch from the central repository and create all kinds of questions and obstacles by not having a sane default that is expected by anyone who has used git anywhere else.

-

Starting a software development blog

Isn’t it a nice way to start a software development related blog by talking about how to start a software development related blog? Or at least it goes one up in the meta blogging level, where there is an article about what needs to be taken care of when creating a software development related blog that starts with how meta this is.

Aren’t there tons of articles regarding this already?

There are tons of articles about how to establish a blog. Some of them are very good at giving generic advice on topics and blogging platform selection, and you should use your favorite search engine to search for those. I’m just going to explain my reasoning for choices I have made when establishing this blog with my current understanding and background research.

Why blog at all?

Why should I blog at all? Blogging takes time and effort and I will probably lose interest in it after some weeks/months/years. The biggest reason for me to start blogging is that I very often find myself explaining things through email discussions, wikis, or chats, sometimes the same things multiple times. Sometimes I also just do benchmarks and other types of digging and comparison, and I would like to have a place to present these findings that I can easily link to.

What I’m not after here is being a part of some specific blogging community. The second thing that I try to avoid is discussing topics that are not software development related, or just sharing links with a very short comment on them. Those things I leave for Facebook and Internet relay chat type of communication platforms.

Coming up with ideas

I have collected ideas and drafts that I could write about for almost a year now, and there are over a dozen different smaller or larger article ideas in my backlog. So at least in the beginning I have some material available. The biggest question is whether I find this interesting enough to continue and how often I come up with new ideas that I could blog about.

Deciding the language I write articles in

I have decided to write my blog in English. It’s not my first, or even my second language, so why write a blog in English? The reason for this is that software development topics come up naturally for me in English. Also people who may be interested in these topics basically have to know English to have any competence in this industry and I’m not aiming at complete beginners.

Selecting a blogging platform

I had a couple of requirements that a blogging platform should satisfy to fit my needs:

- Hosted by a third party. I have hosted my own web server and it requires somewhat constant maintenance and I want to avoid that. Especially I don’t want to have constant security issues.

- To be able to write articles locally on my computer and then easily export the result to the blog. Also as articles generally take quite a few trials and errors, they should be easy to modify.

- Support source code listings and syntax coloring.

- Be able to upload files of any type to the platform and link to them. I know that I would like to produce source code, so why not give out larger programs directly as files without having to list all the source code in the article?

- These files should be easy to modify as, as mentioned before, articles take some iterations to be complete.

First I was thinking about using Blogger as my blogging platform, as quite a few blogs that I follow use it. But I wasn’t convinced that transferring files from a local machine to the Blogger service would be convenient enough, especially with images and other types of files. Then I remembered that some people have pages on GitHub and stumbled upon Jekyll, which creates static files and is aimed specifically at this kind of content creation. So that’s what I decided to use for now.

Jekyll does require some work to set up and probably to maintain, but at least it frees me to do the content in any way I like. Many of the things that I currently need to do manually would probably have been taken care of by plugins on third party blogging services. But that’s the price of doing this in a more customizable way.

Coming up with some basic information

Should I add a personal biography to the blog, or should people who have not met me know me just by the name and the content that I write? I have decided to write a little bit about myself that would hopefully shed some light on who I am. But I have decided not to use the author page as my curriculum vitae, as it would get too long and boring.

Deciding how this blog should look and feel

As a general rule when making personal web pages, I try to make them load fast and avoid images. With this blog, I just took the default Jekyll theme and started customizing it in the places where I want to have more information visible. As new devices with different resolutions come to market and browsers get updated, I may need to update this to something that better supports the responsive nature of the website that I hope to have.

One interesting direction that web development is taking for pages optimized for mobile devices is to provide Accelerated Mobile Pages versions of the content. Currently I have decided to write as much content as possible in Markdown that is processed by the kramdown Markdown processor. It doesn’t natively support Accelerated Mobile Pages yet, so I have opted out of using them for now. In the future it would be really nice to have lazy image loading and support for responsive images with different sizes and device support.

Favicon

A favicon is a small image that should make a site stand out from the rest when you have multiple tabs open in a browser, in bookmarks, and when adding icons of websites/applications to a device or operating system. I had made a favicon for my web site ages ago. I still have the original high resolution version, so now I decided to see what kind of different formats and sizes there are for these icons. Fortunately I didn’t have to look too much, as I found a website called Real Favicon Generator.net that would take care of converting this icon to different formats for me.

I was a little blown away by how many different targets there are for these kinds of icons. The generated file collection included 27 different images and 2 metadata files in addition to code to add to a website header. A current list of them looks like this:

- Favicon for website in different sizes and formats.

- Safari’s pinned tabs.

- Windows 8 and Windows 10 pinned website tiles.

- Android home screen icon.

- iOS home screen icon.

In a couple of years we will probably have new systems and resolutions to support, so I will need to regenerate these favicons to support them.

Responsive images

Some images that I produce are originally made with Inkscape in a vector graphics format. Unfortunately the browser support for vector graphics formats is appalling, so the next best option is to use responsive images that provide different resolution versions of the image based on the capabilities of the display device. Every vector graphic image has 6 raster versions (100%, 125%, 150%, 200%, 300%, and 400%) and a PDF version generated from it. The raster versions can then be used in the srcset image attribute, which enables devices with higher density screens to get crisper images. Higher density images are also used in modern browsers when the page is zoomed. PDF versions are only used in clickable links, as PDF files as images don’t have very good browser support yet.
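To make the srcset idea more concrete, here is a minimal Python sketch that builds a srcset value from the scale factors mentioned above. The file naming scheme ("diagram-150.png" for the 150% render) and the function names are assumptions made for this illustration, not how this blog necessarily names or generates its files.

```python
from html import escape
from pathlib import PurePosixPath

# Scale factors named in the article, as percentages of the base size.
SCALES_PERCENT = [100, 125, 150, 200, 300, 400]


def build_srcset(base_path: str, scales=SCALES_PERCENT) -> str:
    """Return a srcset value with one pixel-density candidate per scale.

    Assumes (hypothetically) that renders are named like "diagram-150.png".
    """
    path = PurePosixPath(base_path)
    candidates = []
    for percent in scales:
        filename = path.with_name(f"{path.stem}-{percent}{path.suffix}")
        # "%g" drops trailing zeros: 1.0 -> "1", 1.25 -> "1.25".
        density = "%g" % (percent / 100)
        candidates.append(f"{filename} {density}x")
    return ", ".join(candidates)


def img_tag(base_path: str, alt: str) -> str:
    """Build an <img> tag whose src falls back to the 100% render."""
    path = PurePosixPath(base_path)
    fallback = path.with_name(f"{path.stem}-100{path.suffix}")
    return (
        f'<img src="{fallback}" '
        f'srcset="{build_srcset(base_path)}" alt="{escape(alt)}">'
    )
```

Calling img_tag("/images/diagram.png", "Diagram") gives a tag where browsers that do not understand srcset simply load the 100% version from src, while others pick the candidate closest to their screen density.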

Optimize everything!

When writing content or doing anything with the layout, I naturally want the sources to be as easy to edit as possible. That also means that unless HTML, CSS, images, and other frequently loaded resources are minified when the page is built, I will lose on page load time. I would like the Jekyll plugins to optimize everything that is produced, but unfortunately only Sass/SCSS assets provide easy minifying options. So page HTML is not minified, even though in the front page’s case it could reduce its size by a few percent.

Every PNG image on this blog is optimized by using ZopfliPNG. It produces around 10% smaller images than OptiPNG, which is the standard open source PNG optimizer. ZopfliPNG takes its time, especially on larger images, but as the optimization only needs to be done just before publishing, it’s worth doing, as it can easily save 10–50% in image size. Also, if PNG images are not meant to be the highest possible quality representations of something, their color space is reduced with pngquant, provided that the reduction does not result in significant quality deterioration. This can result in savings in excess of 50% while still keeping the image format appropriate for line drawings and other high frequency image data.
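A pre-publish step along these lines could look roughly like the following Python sketch. It assumes that the zopflipng and pngquant command line tools are installed and on the PATH; the quality range, the temporary file name, and the function names are hypothetical choices for this illustration, not the settings used for this blog.

```python
import os
import subprocess


def pngquant_command(src: str, dst: str,
                     min_quality: int = 80, max_quality: int = 100) -> list:
    """Command that reduces the palette of src and writes the result to dst."""
    return ["pngquant", f"--quality={min_quality}-{max_quality}",
            "--force", "--output", dst, src]


def zopflipng_command(src: str, dst: str) -> list:
    """Command that losslessly recompresses src into dst (-y overwrites dst)."""
    return ["zopflipng", "-y", src, dst]


def optimize_png(src: str, dst: str, reduce_colors: bool = False) -> None:
    """Optionally quantize src with pngquant, then recompress into dst."""
    zopfli_input = src
    quantized = dst + ".quant.png"  # hypothetical temporary file name
    if reduce_colors:
        result = subprocess.run(pngquant_command(src, quantized))
        # pngquant exits with a non-zero status when it cannot meet the
        # minimum quality; in that case the original colors are kept.
        if result.returncode == 0:
            zopfli_input = quantized
    subprocess.run(zopflipng_command(zopfli_input, dst), check=True)
    if zopfli_input == quantized:
        os.remove(quantized)
```

Running the lossy step first and the lossless recompression last means that the slow ZopfliPNG pass only has to run once per image.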

Every article has its own identicon, and that would result in an extra request if I were to add it as a simple image. Instead I embed it in the page source by using the base64 encoded data URI scheme for the roughly 200 bytes that these page identifier images take. I could shave off some bytes by selecting the image format that takes the least space to encode the same information. I don’t think that I need to go that far, as I usually have far more text on a page than what images usually take.
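As an illustration of the data URI technique, here is a minimal Python sketch that turns PNG bytes into an inline image tag. The function names are made up for this example, and the sketch assumes that the image really is a PNG.

```python
import base64
from html import escape


def png_data_uri(png_bytes: bytes) -> str:
    """Encode raw PNG bytes as a base64 data URI."""
    encoded = base64.b64encode(png_bytes).decode("ascii")
    return f"data:image/png;base64,{encoded}"


def inline_img_tag(png_bytes: bytes, alt: str) -> str:
    """Build an <img> tag that carries the image inside the page source."""
    return f'<img src="{png_data_uri(png_bytes)}" alt="{escape(alt)}">'
```

Base64 encodes every 3 bytes as 4 characters, so a 200 byte image grows to 268 characters plus the short "data:image/png;base64," prefix. That is still much cheaper than a separate HTTP request.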

Another place that I can optimize is to move all the content that is not required for the initial page rendering to the end of the page. It is also possible to load some content asynchronously after the page has initially loaded. In practice this mostly means that I have moved all the JavaScript this page has to the end of the page. Google’s PageSpeed Insights also gives good hints on what else to optimize, and one of them is to either inline CSS or load it asynchronously. Unfortunately Jekyll has not made it easy to include the generated CSS file as a part of the document, so instead of fighting it, I have opted to have it as a separate file. The second option, loading that CSS file asynchronously, unfortunately creates an annoying effect due to the hamburger button when the page is loaded and the style sheets are then applied, so I left the main style sheet to be loaded synchronously in the header.

One place to still optimize would be the style sheets that these pages have, but as this layout should be lightweight enough already, I hope that I can survive without having to optimize the things that affect layout rendering.

The front page of this blog has 5 articles with full content visible. I have opted for that simply because I have noticed that I like reading blogs where I don’t need to open any pages to get to the content. Having fewer articles per page would make the front page load faster and more articles would help in avoiding page switches, so I hope that this is a decent compromise.

All size optimizations are, in the end, pretty much overwhelmed by just adding Disqus as a commenting platform, as it loads much more data than an average article and also includes dozens of different files that a browser needs to fetch.

Two-way communication

One of the powerful features of any blogging platform is that they enable context related two-way communication between the author and the readers. This means that some blogs have comments. As I’m using static pages, the easiest solution is just to leave the commenting options out. But I decided to opt in for Disqus as a commenting system for now, especially because there is a nice post explaining what you need to do with Jekyll to use Disqus on GitHub pages.

Disqus has its haters and issues, but I think that it’s the best bet for now. If I spend most of my time deleting spammy comments, I will then probably just abandon commenting features altogether and let people comment on Twitter or by using some other means, if they feel that’s necessary and are interested enough.

Linking to myself

As I have some presence on multiple social media sites and some personal project resources, I have decided to include the most current ones in the footer of the page.

Is there actually anyone who reads my blog?

As I have opted to use a third party web hosting service for this, I don’t have access to their logs and therefore can’t really know how many page loads I get. So I take the second option and use the full-blown Google Analytics service for this blog. It also provides much better data analysis features than any of the web server log analyzers that I have used, so at least by using it I should have some kind of an idea how many people actually visit this blog.

Also using FeedBurner should provide me statistics on how many people have subscribed to my blog.

Article feeds

For me article feeds have two purposes: to give the subscribers of this blog easy access to new articles and to give me some kind of an idea how many people are regularly reading this blog. Displaying the full article content in the feed is the approach that I like when reading my feed subscriptions, as I can read the whole article in the feed reader. I decided to use a third party feed service, FeedBurner, to get feed subscriber statistics. FeedBurner also provides services I can use to further modify my feed, like making it more compatible with different devices and feed readers.

Adding a search

One thing with a website with a lot of content is that it should be possible to find the content. One way is to add a search to the website, but it’s not that easy to do if the website is a collection of static pages. I have now decided to opt out of adding a separate search widget and hope that the article list is enough. If there are any specific articles which interest people, web search engines would bring those up.

Link all the social media!

Adding share buttons for social media services seems like a no-brainer today. I was already exploring the AddToAny service to add such buttons to my site, but then I decided to do a little bit of background research and think about the effects of such buttons.

A quick search returns articles that suggest avoiding social media share buttons (1, 2, 3) and question their actual value for the website. I checked that AddToAny creates 3 extra requests on a page load. Although such requests are probably cached in the desktop browser, mobile browsers don’t necessarily have such luck with caching and they will then suffer quite a large extra delay while loading those resources.

Also the share buttons would needlessly clutter my otherwise minimalistic layout, even though I’m not a talented enough graphic designer to give a professional opinion about this. I have also had horrible experiences browsing the web on a phone where these kinds of social media sharing buttons actually take real estate on the screen and add to an already slow mobile experience.

These things taken into account, I won’t be adding social media sharing buttons here, as their downsides seem to be bigger than the upsides.

Adding metadata

Adding metadata to pages should make it easier to share these articles around, as different services are able to bring out the relevant content on those pages. The major metadata collections for blog posts used all over the web seem to be Facebook’s Open Graph objects, Twitter Cards, and Rich Snippets for Articles that Google uses. To use all of these, you need to have the following information available:

- Information about the author:

  - Name

  - Web page

  - Twitter handle

- Information about the organization that produces this content:

  - Name

  - Logo

  - Twitter handle

- Information about the article:

  - Title

  - Publishing date

  - Modified date

  - Unique per article image, different sizes

  - Article URL

  - Description/excerpt

  - Tags/keywords
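To illustrate how the fields listed above can map to page markup, here is a minimal Python sketch that produces Open Graph and Twitter Card meta tags. The dictionary keys and the function name are hypothetical, any values passed in are placeholders, and Google's structured data for articles is left out to keep the example short.

```python
from html import escape


def meta_tags(article: dict) -> list:
    """Return Open Graph and Twitter Card <meta> tags for one article.

    The dictionary keys are an assumption made for this sketch.
    """
    open_graph = [
        ("og:type", "article"),
        ("og:title", article["title"]),
        ("og:url", article["url"]),
        ("og:image", article["image"]),
        ("og:description", article["description"]),
        ("article:published_time", article["published"]),
        ("article:modified_time", article["modified"]),
    ]
    # Open Graph allows one article:tag entry per tag.
    open_graph += [("article:tag", tag) for tag in article["tags"]]

    twitter = [
        ("twitter:card", "summary"),
        ("twitter:site", article["site_twitter"]),
        ("twitter:creator", article["author_twitter"]),
        ("twitter:title", article["title"]),
        ("twitter:description", article["description"]),
        ("twitter:image", article["image"]),
    ]

    # Open Graph uses the "property" attribute, Twitter Cards use "name".
    tags = [f'<meta property="{escape(key)}" content="{escape(value)}">'
            for key, value in open_graph]
    tags += [f'<meta name="{escape(key)}" content="{escape(value)}">'
             for key, value in twitter]
    return tags
```

The same title, description, and image end up in both collections, which is why it pays off to keep the data in one place and generate the tags from it.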

Not all of this information is used in all metadata collections, but you need to have that much data available if you want to use all three of these services. Fortunately these services provide metadata validators.

Unique article images

The thing that resulted in the most issues was getting a unique per-article image done. That might make sense for news articles, but when there are software development related articles about abstract matters, it’s pretty hard to come up with images that do not look out of place or are just completely made up. So I decided to completely make up images that are generated automatically, if there is nothing better specified.
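As a rough sketch of how such automatic generation can work, the following Python code derives four small icons from the SHA-1 of an article path and combines them either horizontally or into a 2x2 grid. Only the SHA-1 of the path, the four icons, and the two layouts come from this article. The 5x5 mirrored grid, the way bits are assigned to cells, and all the names are assumptions made for this illustration.

```python
import hashlib

SIZE = 5                # assumed icon width and height in cells
HALF = (SIZE + 1) // 2  # columns taken from the hash; the rest are mirrored
ICONS = 4               # number of icons per article, as in the article


def identicon_grids(article_path: str) -> list:
    """Derive ICONS mirrored SIZE x SIZE grids of 0/1 cells from a path."""
    digest = hashlib.sha1(article_path.encode("utf-8")).digest()
    # Flatten the 20 byte digest into 160 bits, most significant bit first.
    bits = [(byte >> shift) & 1
            for byte in digest for shift in range(7, -1, -1)]
    bits_per_icon = SIZE * HALF  # 15 bits per icon, 60 of the 160 bits used
    grids = []
    for icon in range(ICONS):
        chunk = bits[icon * bits_per_icon:(icon + 1) * bits_per_icon]
        grid = []
        for row in range(SIZE):
            left = chunk[row * HALF:(row + 1) * HALF]
            # Mirror around the middle column: [a, b, c] -> [a, b, c, b, a].
            grid.append(left + left[-2::-1])
        grids.append(grid)
    return grids


def combine_horizontal(grids: list) -> list:
    """Place the icons side by side, giving a wide image."""
    return [[cell for grid in grids for cell in grid[row]]
            for row in range(SIZE)]


def combine_square(grids: list) -> list:
    """Place four icons into a 2x2 grid, giving a square image."""
    top = [grids[0][row] + grids[1][row] for row in range(SIZE)]
    bottom = [grids[2][row] + grids[3][row] for row in range(SIZE)]
    return top + bottom


def render(grid: list) -> str:
    """Render a grid as text, with '#' for filled cells and '.' for empty."""
    return "\n".join("".join("#" if cell else "." for cell in row)
                     for row in grid)
```

Printing render(combine_square(identicon_grids("/2016/01/software-development-blog"))) shows a text version of the square layout. A real implementation would scale each cell to pixels and write an image file instead.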

I’m using an identicon type of approach by creating 4 such icons from the SHA-1 of the article’s path (“/2016/01/software-development-blog” for this one). These icons are then combined either horizontally or into a 2x2 square grid to create article images that are appropriate for different media. It’s a crude trick, but with a very high probability it generates a unique per-article image that can be visually identified. For example, this article has these identifier images:

400x400 square identicon for this article.

800x200 horizontal identicon for this article.

Monetizing blogging

There are various ways to monetize blogging, of which ads are usually the most visible one. As I’m just starting and I don’t have any established readership, it would be a waste of my time to add any advertisements on these pages. If I get to 1000 readers monthly, then I will probably add some very lightweight ads, as that way I could use the money for one extra chocolate bar per month.

How timeless is this?

In 5 years I will probably have lost interest in blogging. Most of the pages that I’m now linking to will be gone. Many of the subjects will be outdated as either technology or development methods move forward. Jekyll will be either in maintenance mode or a completely abandoned project. If I continue blogging, I might even have started using some real blogging service or some other blogging platform.

Conclusion

I have not been actively involved in web development during the last couple of years, and this provided me an excellent opportunity to see what kinds of web development related advances and changes there have been. The biggest surprise comes from the metadata usage, and from how many different systems expect to have icons in different sizes and formats.

I generally try to put usability and speed before looking fancy. In this blog, however, the need for speed is not always satisfied, as having the commenting system hosted outside adds a lot of extra data to transfer and makes these pages heavier for low power devices.
